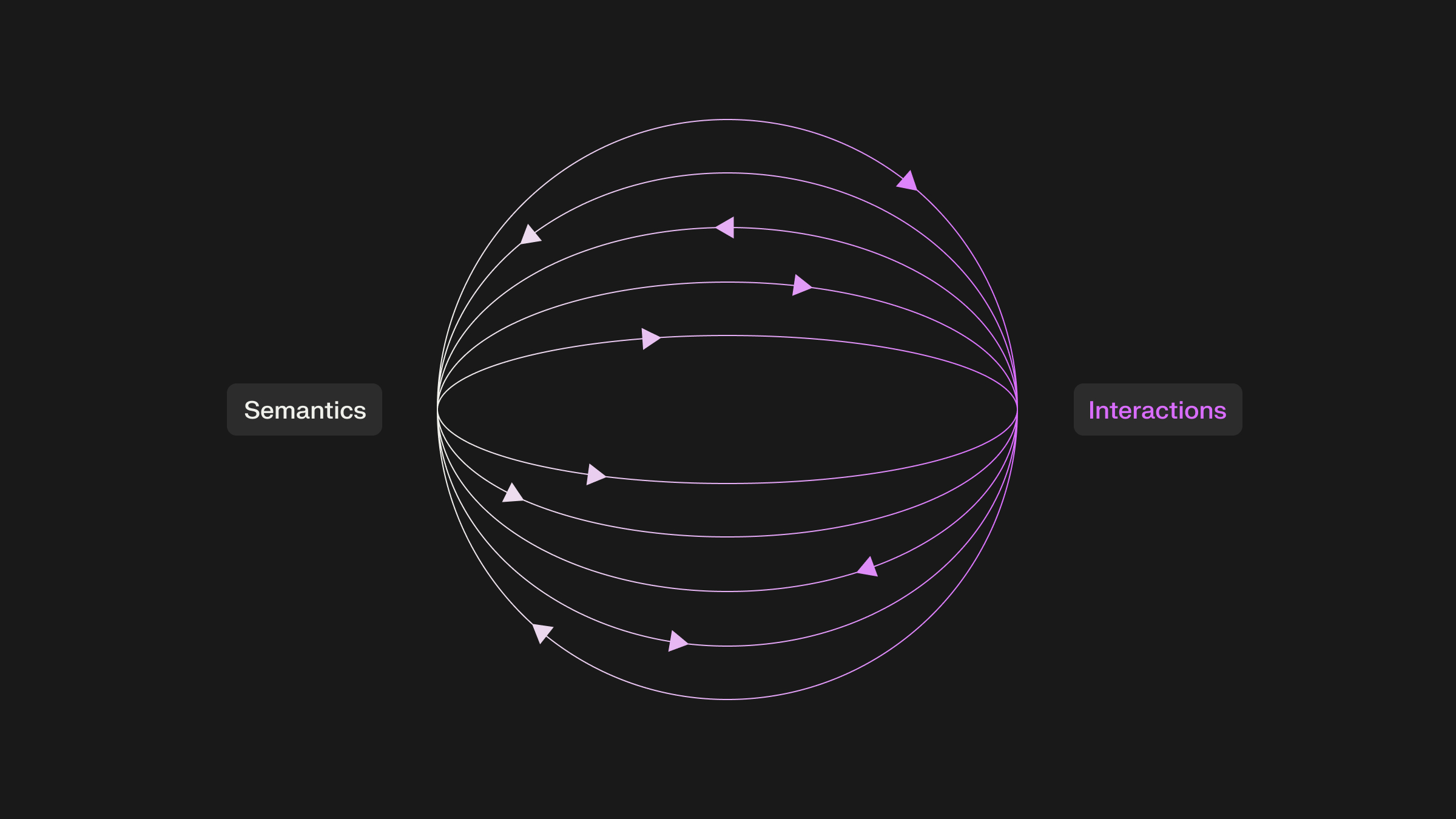

Modern recommendation systems grapple with two powerful but often distinct sources of information:

- Content Understanding (Semantics): Driven by the revolution in Natural Language Processing (NLP), large pre-trained language models (LLMs) and sentence Transformers excel at understanding the meaning embedded in item descriptions, titles, and tags. They know that "iPhone 15" and "Samsung Galaxy S24" are semantically similar (both are smartphones). This is invaluable for understanding item characteristics and handling cold-start scenarios where interaction data is scarce.

- User Behavior (Interactions): Collaborative Filtering (CF) techniques, from classic Matrix Factorization to modern autoencoders like ELSA/EASE, are masters at uncovering patterns in how users interact with items. They learn that users who buy an "iPhone 15" frequently also buy "AirPods", even though these items are semantically quite different. This captures complementary relationships, trends, and hidden user preferences crucial for relevance.

The challenge? Models excelling at one often struggle with the other. Pure LLM embeddings capture semantics but miss interaction patterns. Pure CF models capture interactions but fail on new items and don't leverage rich content information. How can we get the best of both worlds?

A compelling new direction aims to bridge this gap by fine-tuning powerful pre-trained language models using user interaction data. This approach seeks to create embeddings that are simultaneously aware of semantic meaning and behavioral patterns.

This post explores this exciting trend:

- The fundamental gap between semantic and interaction similarity.

- The core methodology: Teaching LLMs about user behavior.

- A concrete example: The beeFormer framework.

- The benefits and challenges of this hybrid approach.

- How platforms like Shaped enable these techniques.

The Semantic vs. Interaction Gap: Why It Matters

Imagine recommending accessories for a newly purchased smartphone:

- Semantic Similarity: An LLM embedding model would rate other smartphones (e.g., "Google Pixel") as highly similar to "iPhone 15" because they share many features. It might rank "AirPods" or a "Phone Case" much lower.

- Interaction Similarity: A CF model trained on purchase data would likely rank "AirPods" and "Phone Case" very highly, as these are frequently bought together with an "iPhone 15". It wouldn't necessarily rank other smartphones highly unless users actually exhibited that switching behavior.

Neither view alone is complete. We need models that understand both that an iPhone is a phone and that people who buy iPhones often buy AirPods.
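To make the gap concrete, here is a toy Python sketch that ranks items for "iPhone 15" in two ways: by cosine similarity of hand-made "semantic" vectors, and by co-purchase counts from a handful of invented baskets. Both the vectors and the baskets are hypothetical illustrations, not outputs of a real embedding model or real purchase data.

```python
import math
from collections import Counter

# Hypothetical, hand-made "semantic" vectors (NOT real model outputs).
# Dimensions loosely stand for [phone-ness, audio-ness, accessory-ness].
SEMANTIC = {
    "iPhone 15": [0.95, 0.05, 0.20],
    "Google Pixel": [0.90, 0.05, 0.25],
    "AirPods": [0.10, 0.90, 0.30],
    "Phone Case": [0.30, 0.00, 0.90],
}

# Hypothetical purchase baskets, one set of items per order.
BASKETS = [
    {"iPhone 15", "AirPods"},
    {"iPhone 15", "AirPods", "Phone Case"},
    {"iPhone 15", "Phone Case"},
    {"Google Pixel", "Phone Case"},
    {"iPhone 15", "AirPods"},
]


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_by_semantics(query):
    """Other items ordered by cosine similarity to the query's semantic vector."""
    others = [item for item in SEMANTIC if item != query]
    return sorted(others, key=lambda item: cosine(SEMANTIC[query], SEMANTIC[item]), reverse=True)


def rank_by_cooccurrence(query, baskets):
    """Other items ordered by how often they were bought together with the query."""
    counts = Counter()
    for basket in baskets:
        if query in basket:
            counts.update(item for item in basket if item != query)
    return [item for item, _ in counts.most_common()]


print(rank_by_semantics("iPhone 15"))              # ['Google Pixel', 'Phone Case', 'AirPods']
print(rank_by_cooccurrence("iPhone 15", BASKETS))  # ['AirPods', 'Phone Case']
```

The semantic view puts the competing phone first; the interaction view never mentions it and puts the accessory first. Hybrid embeddings aim to encode both orderings in a single vector space.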

Bridging the Gap: The Core Idea - Fine-tuning LLMs on Interactions

The central idea is elegant: leverage the strong semantic priors learned by large pre-trained language models but adapt them to the specific nuances of user behavior within a recommendation context. The general process looks like this:

- Encoder (Language Model): Start with a pre-trained sentence Transformer (like MPNet, BERT variants, BGE, Nomic Embed, etc.). This model takes item text descriptions as input and outputs initial semantic embeddings (v_semantic).

- Decoder/Supervisor (Interaction Model/Loss): Use a mechanism derived from collaborative filtering that operates on user-item interactions. This component implicitly or explicitly defines what constitutes a "good" set of item embeddings from an interaction perspective. Examples include:

  - Matrix Factorization loss terms.

  - Autoencoder reconstruction loss (like in ELSA/EASE, aiming to reconstruct user interaction vectors).

  - Contrastive losses based on positive/negative interaction pairs.

- Connect & Compute the Loss: Feed the semantic embeddings (v_semantic) generated by the LLM encoder into the interaction-based decoder/supervisor. Calculate a loss based on how well these embeddings explain or predict user interactions.

- Fine-tuning: Critically, backpropagate the gradients from this interaction-based loss back through the decoder/supervisor and into the parameters of the original language model encoder.
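The four steps above can be sketched end to end in NumPy. This is a minimal illustration under loud assumptions: the "language model encoder" is replaced by a single hypothetical linear layer `W` applied to fixed per-item features `T` (so the chain rule can be written by hand), the interaction data is random, and the supervisor is the plain reconstruction loss ||X @ A @ A^T - X||^2, one of the autoencoder-style options listed above. A real setup would run a Sentence Transformer and let an autodiff framework compute the backward pass.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 50 users x 20 items of binary interactions, plus a
# fixed 16-dim feature vector per item standing in for its text description.
n_users, n_items, d_text, d_emb = 50, 20, 16, 4
X = (rng.random((n_users, n_items)) < 0.2).astype(float)
T = rng.normal(size=(n_items, d_text)) / np.sqrt(d_text)


def encode(W):
    """Stand-in encoder: one linear layer from item features to embeddings."""
    return T @ W  # (n_items, d_emb): one embedding row per item (v_semantic)


def interaction_loss_and_grad(A):
    """Reconstruction supervisor: mean over users of ||x_u A A^T - x_u||^2.

    Returns the loss and its gradient with respect to the item embeddings A.
    """
    R = X @ A @ A.T - X  # residual, (n_users, n_items)
    loss = np.sum(R ** 2) / n_users
    grad_A = 2.0 * (X.T @ R @ A + R.T @ X @ A) / n_users
    return loss, grad_A


def train_step(W, lr):
    A = encode(W)                                # 1) encoder -> embeddings
    loss, grad_A = interaction_loss_and_grad(A)  # 2) interaction-based loss
    grad_W = T.T @ grad_A                        # 3) chain rule into the encoder
    return W - lr * grad_W, loss                 # 4) gradient-descent update


W = 0.3 * rng.normal(size=(d_text, d_emb))  # random stand-in for pre-trained weights
losses = []
for _ in range(300):
    W, loss = train_step(W, lr=2e-4)
    losses.append(loss)
print(f"reconstruction loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The only line that touches the encoder is step 3: the supervisor never sees `W` directly, yet its gradient reaches `W` through the embeddings, which is exactly what moves v_semantic toward v_hybrid.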

The result? The language model learns to adjust its weights, shifting the embeddings it produces. The new embeddings (v_hybrid) are no longer purely semantic; they are nudged towards positions in the embedding space that better reflect observed user interaction patterns, while hopefully retaining much of their original semantic understanding. The LLM learns what "similarity" means in the context of user behavior on a specific platform or domain.

A Concrete Example: The beeFormer Framework

The beeFormer paper (Vančura et al., RecSys '24) provides a clear implementation of this idea:

- Encoder: Uses standard pre-trained Sentence Transformer models.

- Decoder/Supervisor: Leverages the training objective of ELSA, a scalable shallow linear autoencoder. ELSA learns item embeddings (A in the paper's notation) by minimizing the error in reconstructing user interaction vectors (X_u) as X_u @ A @ A^T.

- beeFormer Process:

  - Generate initial item embeddings A using the Sentence Transformer from item descriptions.

  - Compute the ELSA reconstruction loss using these embeddings A.

  - Calculate the gradient of the ELSA loss with respect to the embeddings A.

  - Backpropagate this gradient (`checkpoint` in their Algorithm 1) into the Sentence Transformer model to update its weights.

- Scalability Solutions: To handle the massive "effective batch size" (all items are needed for A @ A^T), beeFormer uses:

  - Gradient Checkpointing: Avoids storing the Transformer's intermediate activations for every item at once; they are recomputed when the gradient is backpropagated through the model.

  - Gradient Accumulation: Computes gradients for smaller batches and accumulates them before updating the Transformer weights.

  - Negative Sampling / Batching: Only computes embeddings and gradients for items relevant to the current user batch (plus some negative samples), avoiding processing the entire item catalog in each step.
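The process and scalability tricks above can be illustrated with a small NumPy sketch. It is not beeFormer's code: the Transformer is again replaced by a hypothetical linear encoder `W` over fixed item features `T`, the negative-sampling scheme and all sizes are invented, and the loss follows ELSA's formulation as we understand it (rows of A are L2-normalized and the decoder is A @ A^T - I, so a user's reconstruction is X_u @ (A @ A^T - I)); the published implementation may normalize or weight the loss differently. What the sketch does show is the order of operations: embed the sampled items once, get the gradient of the loss with respect to those embeddings only, then push that gradient into the encoder chunk by chunk and accumulate it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy setup: binary interactions X, per-item features T standing in
# for tokenized descriptions, and linear weights W standing in for the Transformer.
n_users, n_items, d_text, d_emb = 64, 30, 12, 6
X = (rng.random((n_users, n_items)) < 0.15).astype(float)
T = rng.normal(size=(n_items, d_text)) / np.sqrt(d_text)
W = 0.3 * rng.normal(size=(d_text, d_emb))


def encode(W, items):
    """Stand-in encoder forward pass for a subset of item indices."""
    return T[items] @ W


def elsa_loss_and_grad(E, X_sub):
    """ELSA-style loss on raw embeddings E and its gradient dL/dE.

    A is E with L2-normalized rows; the reconstruction is X_sub @ (A @ A.T - I),
    so an item cannot trivially reconstruct itself (diag of A A^T - I is zero).
    """
    n = X_sub.shape[0]
    norms = np.linalg.norm(E, axis=1, keepdims=True)
    A = E / norms
    R = X_sub @ A @ A.T - 2.0 * X_sub  # (X A A^T - X) minus the target X
    loss = np.sum(R ** 2) / n
    grad_A = 2.0 * (X_sub.T @ R @ A + R.T @ X_sub @ A) / n
    # Chain rule through row normalization: dA_i/dE_i = (I - a_i a_i^T) / ||e_i||.
    grad_E = (grad_A - np.sum(grad_A * A, axis=1, keepdims=True) * A) / norms
    return loss, grad_E


def sample_items(user_batch, n_negatives):
    """Items the batch interacted with plus random negatives (hypothetical scheme)."""
    positives = np.flatnonzero(X[user_batch].sum(axis=0) > 0)
    negatives = rng.choice(n_items, size=n_negatives, replace=False)
    return np.union1d(positives, negatives)


def accumulate_encoder_grad(items, grad_E, chunk):
    """Push dL/dE into the encoder one chunk of items at a time and sum.

    With a real Transformer, each chunk would be re-encoded with autograd on and
    backpropagated (in PyTorch: emb_chunk.backward(gradient=grad_chunk)), so only
    one chunk's activations are in memory at once; gradients add up across chunks.
    """
    grad_W = np.zeros((T.shape[1], grad_E.shape[1]))
    for start in range(0, len(items), chunk):
        part = slice(start, start + chunk)
        grad_W += T[items[part]].T @ grad_E[part]  # this chunk's contribution
    return grad_W


def beeformer_style_step(W, user_batch, lr=1e-2, n_negatives=5, chunk=8):
    items = sample_items(user_batch, n_negatives)
    X_sub = X[np.ix_(user_batch, items)]
    E = encode(W, items)                         # 1) embed (PyTorch: under torch.no_grad())
    loss, grad_E = elsa_loss_and_grad(E, X_sub)  # 2) gradient w.r.t. embeddings only
    grad_W = accumulate_encoder_grad(items, grad_E, chunk)  # 3) chunked backprop
    return W - lr * grad_W, loss                 # 4) encoder update


for step in range(3):
    batch = rng.choice(n_users, size=16, replace=False)
    W, loss = beeformer_style_step(W, batch)
    print(f"step {step}: loss on sampled items = {loss:.3f}")
```

Because the loss is computed once on the full set of sampled embeddings, splitting the backward pass into chunks changes memory use but not the resulting gradient.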

beeFormer demonstrated significant improvements over using purely semantic embeddings or standard CF methods, especially in cold-start, zero-shot, and time-split scenarios. It also showed successful knowledge transfer between datasets and benefits from training on combined multi-domain data, paving the way for potentially universal recommendation encoders.

Why This Matters: The Benefits

Combining semantic pre-training with interaction fine-tuning offers compelling advantages:

- ✅ Improved Cold-Start/Zero-Shot: Models inherit the LLM's ability to understand new items based solely on their text descriptions, but the embeddings are more relevant for recommendation tasks than purely semantic ones.

- ✅ Richer Embeddings: Captures both "is-a" relationships (semantic) and "bought-with" relationships (interaction).

- ✅ Knowledge Transfer: Pre-trained LLMs provide a strong starting point. Fine-tuning can potentially transfer learned interaction patterns across related datasets or domains.

- ✅ Potential for Universal Models: Training on diverse interaction datasets could lead to powerful, domain-agnostic recommendation encoders, as hinted by beeFormer's multi-dataset experiments.

- ✅ Leverages Existing Infrastructure: Builds upon well-established sentence Transformer libraries and architectures.
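A short sketch of why the cold-start benefit follows from this design: once the encoder is fine-tuned, a brand-new item needs only its description to get an embedding, and an ELSA-style decoder can score it for a user right away. The function below assumes, following the X_u @ A @ A^T reconstruction described earlier, that a user's score for a new item j is x_u @ A_known @ a_j; the function names are hypothetical and the toy vectors in the usage are hand-made, not encoder outputs.

```python
import numpy as np


def normalize_rows(E):
    """L2-normalize each row (one row per item embedding)."""
    return E / np.linalg.norm(E, axis=1, keepdims=True)


def score_new_items(x_u, A_known, A_new):
    """Score unseen items for one user with an ELSA-style decoder.

    x_u:     (n_known,) binary interaction vector over catalog items
    A_known: (n_known, d) normalized embeddings of catalog items
    A_new:   (n_new, d) normalized embeddings of new items, assumed to come from
             the fine-tuned text encoder applied to their descriptions alone
    """
    user_profile = x_u @ A_known   # (d,) the user's history in embedding space
    return A_new @ user_profile    # (n_new,) one score per new item


# Hand-made toy embeddings: three catalog items, two new items.
A_known = normalize_rows(np.array([[1.0, 0.0, 0.0],
                                   [0.0, 1.0, 0.0],
                                   [0.0, 0.0, 1.0]]))
A_new = normalize_rows(np.array([[1.0, 1.0, 0.0],    # close to catalog items 0 and 1
                                 [0.0, 0.0, 1.0]]))  # close to catalog item 2
x_u = np.array([1.0, 1.0, 0.0])  # user interacted with catalog items 0 and 1
print(score_new_items(x_u, A_known, A_new))  # scores ≈ [1.414, 0.0]
```

No interaction with the new items is needed: their scores come entirely from where the encoder places their descriptions relative to items the user already engaged with.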

Building & Training: The Challenges

This approach is powerful but not without challenges:

- Data Requirements: Needs both high-quality textual descriptions for items and sufficient user interaction data for meaningful fine-tuning.

- Computational Cost: Fine-tuning large Transformer models is computationally expensive and requires significant GPU resources. The interaction-based loss calculation can also add overhead.

- Scalability: Efficiently handling the backpropagation through potentially millions of item embeddings requires careful engineering (as addressed by beeFormer).

- Hyperparameter Tuning: Requires tuning both the LLM fine-tuning hyperparameters (learning rate, layers) and the interaction loss parameters (e.g., regularization in ELSA, negative sampling rate).

- Catastrophic Forgetting: Risk of the LLM losing some of its general semantic understanding while specializing on interaction patterns. Balancing techniques might be needed.

- Evaluation: Designing evaluation protocols that fairly assess both semantic understanding and interaction prediction performance.
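For the interaction side of evaluation, a common building block is Recall@K on held-out interactions (for a cold-start protocol, the held-out items would be ones never seen during fine-tuning). The helper below is a generic sketch, not the protocol of any particular paper; in particular, dividing by min(K, number of held-out items) is one of several conventions in use.

```python
import numpy as np


def recall_at_k(scores, held_out, k):
    """Mean Recall@k over users that have at least one held-out interaction.

    scores:   (n_users, n_items) predicted scores; mask items already seen in
              training (e.g. set them to -inf) before calling
    held_out: (n_users, n_items) binary matrix of held-out interactions
    """
    top_k = np.argsort(-scores, axis=1)[:, :k]  # indices of the k best items per user
    hits = np.take_along_axis(held_out, top_k, axis=1).sum(axis=1)
    n_relevant = held_out.sum(axis=1)
    has_relevant = n_relevant > 0
    return float(np.mean(hits[has_relevant] / np.minimum(k, n_relevant[has_relevant])))


scores = np.array([[0.9, 0.1, 0.5],
                   [0.2, 0.8, 0.3]])
held_out = np.array([[1, 0, 0],
                     [0, 0, 1]])
print(recall_at_k(scores, held_out, k=1))  # 0.5
print(recall_at_k(scores, held_out, k=2))  # 1.0
```

Reporting the same metric on a random split, a time split, and an unseen-item split separates how much of the gain comes from interaction modeling and how much from the encoder's text understanding.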

Hybrid Embeddings in Practice: The Shaped Approach

Platforms like Shaped are designed to incorporate such cutting-edge techniques. With the addition of beeFormer, users can leverage this hybrid approach directly:

In this hypothetical configuration:

- We select `beeformer` as the `embedding_policy`.

- We specify a `base_transformer_model` to initialize the encoder.

- We include parameters relevant to the interaction-based fine-tuning (`factors`, `regularization`) and the training process (`learning_rate`, `negative_samples`).

Shaped manages the complex training loop, scalability techniques (like those in beeFormer), and deployment, allowing users to benefit from these sophisticated hybrid embeddings without building the intricate pipeline from scratch.

The Future: Smarter Embeddings, Better Recommendations

The fusion of large language models and user interaction data is one of the most promising frontiers in recommendation systems. Research is actively exploring:

- Integrating More Sophisticated Interaction Models: Using more complex CF models than ELSA as the "decoder/supervisor."

- Multi-Modal Models: Extending this approach to combine text, images, and interaction data for richer embeddings.

- Larger Base Models: Leveraging ever-larger and more capable foundation models.

- Continual Learning: Efficiently updating models as new interactions and items arrive.

- Cross-Domain Fine-tuning: Systematically training models across diverse datasets to build truly universal recommendation encoders.

Conclusion: The Best of Both Worlds

By fine-tuning pre-trained language models on user interaction data, techniques like beeFormer are creating a new class of recommendation embeddings – ones that understand both the meaning of content and the patterns of user behavior. This hybrid approach overcomes key limitations of using either semantic or collaborative signals in isolation, leading to improved performance, better cold-start handling, and the exciting potential for more universal, transferable recommendation models. As platforms like Shaped adopt these methods, the power of combining semantic knowledge with behavioral insights becomes increasingly accessible, driving the next wave of personalized experiences.

Further Reading / References

- Vančura, V., Kordík, P., & Straka, M. (2024). beeFormer: Bridging the Gap Between Semantic and Interaction Similarity in Recommender Systems. RecSys '24 / arXiv:2409.10309. (The beeFormer paper)

- Reimers, N., & Gurevych, I. (2019). Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. EMNLP. (Foundation for Sentence Transformers)

- Vančura, V., et al. (2022). Scalable Linear Shallow Autoencoder for Collaborative Filtering. RecSys '22. (ELSA paper)

- Steck, H. (2019). Embarrassingly shallow autoencoders for sparse data. WWW '19. (EASE paper)

Ready to enhance your recommendations by combining semantic understanding with behavioral data?

Request a demo of Shaped today to see how models like beeFormer can improve your results. Or, start exploring immediately with our free trial sandbox.