Recommendation systems aim to predict what users will like. One of the most successful and influential approaches, especially in the era before deep learning dominance, falls under the umbrella of Collaborative Filtering (CF). The core idea of CF is simple: leverage the collective behavior of users ("wisdom of the crowd") to make predictions. If users who liked item A also liked item B, then another user who likes item A might also like item B.

Early CF methods often relied on finding similar users or items (neighborhood methods). However, these can struggle with very large and sparse datasets, where most users have interacted with only a tiny fraction of available items. This is where Matrix Factorization (MF) techniques revolutionized the field, notably gaining prominence during the Netflix Prize competition.

MF models tackle the sparsity problem by learning latent factors (hidden features) for both users and items from the interaction data. These factors represent underlying characteristics that explain user preferences and item attributes.

This post provides a deep dive into Matrix Factorization:

- The core concept of decomposing the user-item interaction matrix.

- How MF models learn latent factors (Optimization).

- Popular algorithms: Stochastic Gradient Descent (SGD) and Alternating Least Squares (ALS).

- Handling explicit vs. implicit feedback.

- Incorporating biases.

- Advantages, limitations, and its place in modern RecSys.

The Core Idea: Decomposing the Interaction Matrix

Imagine a large matrix R where rows represent users and columns represent items. The entry R_ui contains the interaction value between user u and item i.

- Explicit Feedback:

R_uicould be a user's rating (e.g., 1-5 stars).

- Implicit Feedback:

R_uicould be binary (1 if interacted, 0 otherwise) or represent interaction frequency (e.g., number of views, purchase count).

This matrix R is typically very sparse, meaning most entries are unknown or zero.

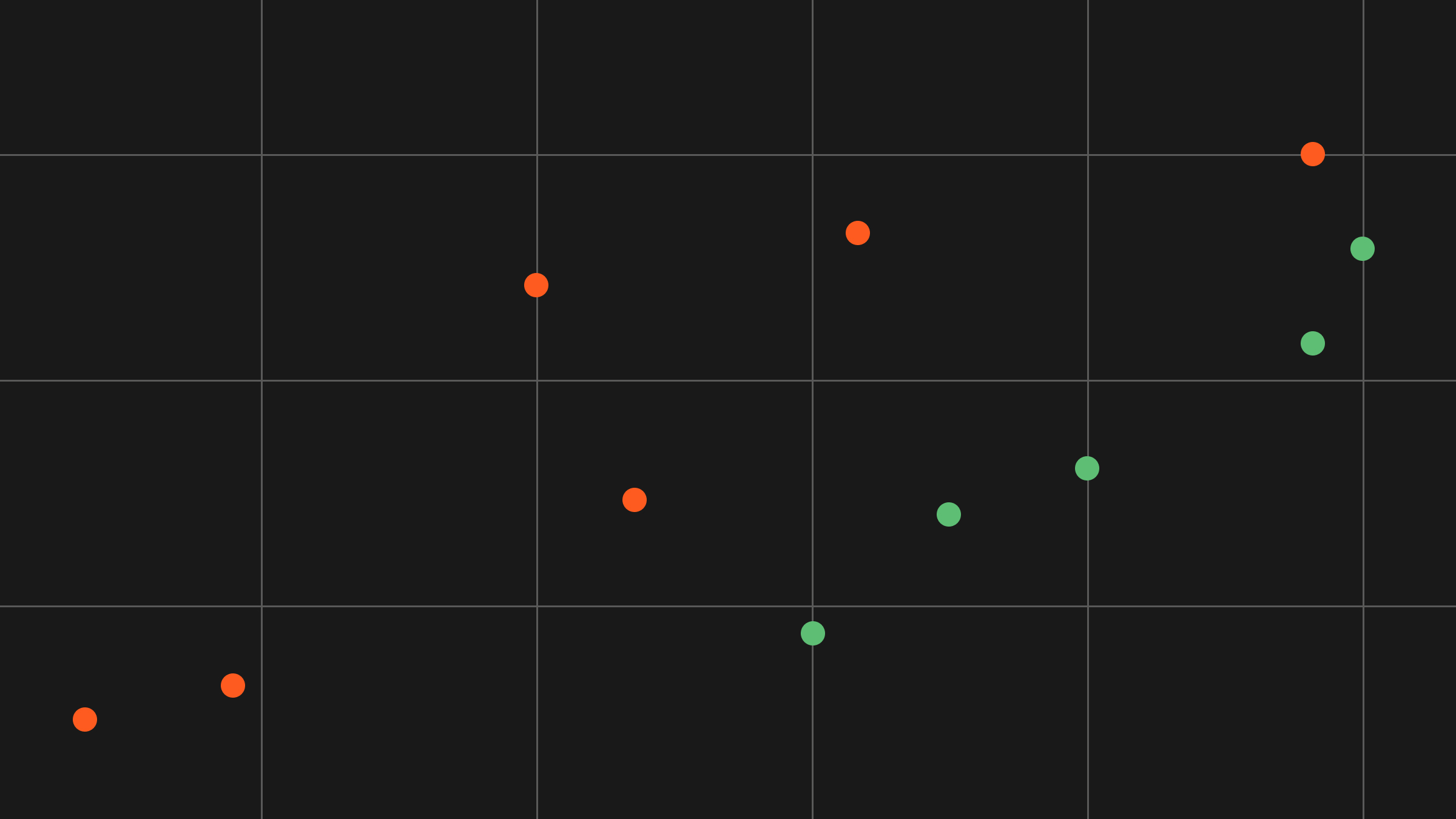

Matrix Factorization aims to find two lower-dimensional matrices, P (user factors) and Q (item factors), such that their product approximates the original interaction matrix R:

R ≈ P × Qᵀ

- P is a matrix where each row p_u is a vector of latent factors for user u (size: num_users × k).

- Q is a matrix where each row q_i is a vector of latent factors for item i (size: num_items × k).

- k is the number of latent factors (dimensionality), a hyperparameter typically much smaller than the number of users or items.

The predicted interaction score (e.g., rating) r̂_ui for user u and item i is simply the dot product of their latent factor vectors:

r̂_ui = p_u ⋅ q_i = ∑_{f=1}^{k} P_{uf} Q_{if}

(The diagram showing a large sparse matrix R being approximated by the product of two smaller, dense matrices P and Qᵀ).

What are Latent Factors? These factors are not predefined; they are learned from the data. They might capture underlying dimensions like genres, user age appeal, item complexity, user adventurousness, etc., but often in a way that's not directly interpretable by humans. The key is that users with similar latent factors tend to like items with similar latent factors.

How Matrix Factorization Learns: Optimization

The goal is to find the user factor matrix P and item factor matrix Q that minimize the difference between the predicted scores (p_u ⋅ q_i) and the actual observed interaction values (R_ui) in the training data.

A common objective function (especially for explicit feedback) is the regularized squared error:

minimize_{P, Q} ∑_{(u,i) ∈ K} (R_{ui} - p_u ⋅ q_i)² + λ (||P||² + ||Q||²)

- The first term sums the squared error over all known user-item interactions (u, i) in the training set K.

- The second term is a regularization term (usually L2 regularization) weighted by λ. This prevents the factors from becoming too large, which helps avoid overfitting, especially given the sparsity of

R. ||P||²and||Q||²are the squared Frobenius norms of the matrices.

Two main algorithmic approaches are used to solve this optimization problem:

- Stochastic Gradient Descent (SGD)

- Alternating Least Squares (ALS)

Common MF Algorithms: SGD and ALS

Stochastic Gradient Descent (SGD)

SGD is a general and versatile optimization technique applicable to many machine learning models, including MF.

- Process:

- Initialize P and Q with small random values.

- Iterate through all known interactions

(u, i)in the training set K multiple times (epochs). - For each interaction

(u, i):- Calculate the prediction error:

e_ui = R_{ui} - p_u ⋅ q_i - Update the user factor vector p_u and item factor vector

q_iby moving them slightly in the direction that reduces the error, considering regularization:p_u ← p_u + γ (e_ui * q_i - λ * p_u)q_i ← q_i + γ (e_ui * p_u - λ * q_i)

- γ is the learning rate.

- Calculate the prediction error:

- Pros: Easy to implement, can handle various loss functions, often faster convergence per iteration on very large datasets if implemented carefully.

- Cons: Can be sensitive to learning rate, convergence can be slow, harder to parallelize effectively compared to ALS.

Alternating Least Squares (ALS)

ALS takes advantage of the fact that if you fix one of the matrices (P or Q), the objective function becomes quadratic in the other, which can be solved optimally using least squares regression.

- Process:

- Initialize P and Q (e.g., randomly).

- Repeat until convergence:

- Fix Q, solve for P: For each user u, find the

p_uthat minimizes the error for all items i rated by that user, holding allq_iconstant. This is a standard least-squares problem and can be solved efficiently. - Fix P, solve for Q: For each item i, find the

q_ithat minimizes the error for all users u who rated that item, holding all p_u constant. This is also a least-squares problem.

- Fix Q, solve for P: For each user u, find the

- Pros: Highly parallelizable (updates for different users/items within a step are independent), often converges faster in terms of wall-clock time on multi-core systems, particularly well-suited for implicit feedback.

- Cons: Typically limited to squared error loss, each iteration can be computationally more expensive than an SGD pass if not parallelized.

Handling Implicit Feedback (W-ALS)

MF, especially ALS, is very effective for implicit feedback datasets (clicks, views, purchases) where we mostly observe positive interactions (user interacted with item) and have a massive number of unobserved interactions (which are not confirmed negative feedback).

The key ideas for adapting MF (often called Weighted ALS or W-ALS) for implicit feedback are:

- Treat all user-item pairs: Consider all pairs

(u, i), including unobserved ones, as part of the training data. - Define Preference

P_ui: SetP_ui= 1 if user u interacted with item i(R_ui > 0), andP_ui= 0 otherwise. - Define Confidence C_ui: Introduce a confidence level for each observation. We are more confident in positive interactions than in unobserved ones (which might just mean the user hasn't seen the item yet). A common formulation is:

C_ui = 1 + α * R_ui- α is a hyperparameter controlling the baseline confidence for positive interactions (often set around 40). Observed interactions

(R_ui > 0)get higher confidence, while unobserved(R_ui = 0)get a baseline confidence of 1.

- Weighted Objective Function: Minimize a weighted squared error:

minimize_{P, Q} ∑_{u,i} C_{ui} (P_{ui} - p_u ⋅ q_i)² + λ (||P||² + ||Q||²)

ALS is particularly well-suited for this weighted formulation because the least-squares steps can efficiently incorporate the C_ui weights.

Adding Biases

The basic MF model r̂_ui = p_u ⋅ q_i can be improved by accounting for user and item biases – tendencies for some users to give higher ratings than others, or for some items to receive higher ratings regardless of the user.

The prediction formula becomes:

r̂_ui = μ + b_u + b_i + p_u ⋅ q_i

- μ: Global average rating/interaction value.

- b_u: User bias term for user u.

- b_i: Item bias term for item i.

These bias terms are learned alongside the latent factors p_u and q_i during optimization (either SGD or ALS). This often leads to significantly better accuracy by capturing baseline effects.

Advantages of Matrix Factorization

- ✅ Captures Latent Structure: Effectively models hidden preferences and item characteristics driving interactions.

- ✅ Handles Sparsity: Works reasonably well even when the interaction matrix is very sparse.

- ✅ Scalability: Computationally more efficient than traditional neighborhood-based CF, especially ALS which parallelizes well.

- ✅ Good Performance: Often provides strong baseline performance and was state-of-the-art for some time.

- ✅ Foundation for More Complex Models: Many advanced techniques build upon or incorporate MF principles.

Limitations of Matrix Factorization

- ❌ Cold-Start Problem: Cannot easily recommend items to new users or recommend new items to any user, as they lack interaction history to learn factors from (requires workarounds like using content features).

- ❌ Feature Representation: Difficulty incorporating side information (user demographics, item descriptions/genres) directly into the factorization process itself (though extensions exist).

- ❌ Linearity in Latent Space: The dot product captures linear relationships between latent factors. More complex, non-linear interactions might be missed (addressed by deep learning models).

MF vs. Other Recommendation Techniques

- Neighborhood Methods (User/Item KNN): MF often scales better and can provide better accuracy by capturing more global patterns than local neighborhoods.

- Deep Learning Models (Two-Tower, NCF, etc.): Deep learning models offer more flexibility in architecture, can model non-linear interactions, and easily incorporate diverse side features end-to-end. However, they are generally more complex to implement and train. MF is often used as a baseline to compare against.

- Content-Based Filtering: MF is purely collaborative (uses only interaction data), while content-based uses item features. Hybrid models often combine both techniques.

Applications and Legacy

Matrix Factorization has been a cornerstone of recommendation systems for over a decade.

- Product Recommendations: Used widely in e-commerce.

- Movie/Music Recommendations: Foundational in streaming services.

- Baseline Model: Still serves as a strong baseline for evaluating newer algorithms.

- Components in Hybrid Systems: MF features or embeddings are sometimes used as inputs to more complex models.

Its impact, particularly highlighted by the Netflix Prize, solidified latent factor models as a primary tool in the RecSys toolkit.

Matrix Factorization in Practice: An Example with Shaped

Modern recommendation platforms like Shaped allow you to easily configure and deploy various models, including Matrix Factorization techniques like ALS and SVD. These models can be used for different parts of the recommendation pipeline, such as generating candidate item embeddings (embedding policy) or directly scoring items (scoring policy).

Here's a simplified example of how you might configure ALS as an embedding policy within Shaped:

In this configuration:

- We define a model named my-mf-model.

- The embedding_policy is set to use als.

- We specify the number of factors (latent dimensions) to learn as 64.

- We set the regularization strength.

- We enable bm25 weighting, which is common for implicit feedback scenarios handled well by ALS.

- The scoring_policy is set to auto-tune, letting Shaped optimize the ranking stage, which might involve a different model type altogether. Alternatively, you could set policy_type: als here too if you wanted to use ALS for both embedding and scoring.

This declarative approach allows practitioners to leverage powerful algorithms like Matrix Factorization without needing to implement the underlying optimization routines from scratch.

Conclusion: A Foundational RecSys Technique

Matrix Factorization provides an elegant and powerful way to perform collaborative filtering by uncovering latent dimensions of user preferences and item characteristics from sparse interaction data. Algorithms like SGD and especially ALS (with its weighted variant for implicit feedback) offer practical ways to learn these factors.

While deep learning has introduced more powerful and flexible models, understanding MF is crucial. It represents a fundamental concept in recommendation systems, offers strong performance in many scenarios, and its principles continue to influence the design of more advanced techniques. It remains an essential part of the history and practice of building effective recommendation systems.

Ready to build powerful recommendations with Matrix Factorization and other state-of-the-art models?

Request a demo of Shaped today to see it in action with your specific use case. Or, start exploring immediately with our free trial sandbox.