Every real-time dashboard, machine learning model, and personalized user experience depends on one foundational layer: data ingestion. It’s the first step in any modern data pipeline, responsible for collecting, validating, and delivering data from source systems into downstream platforms where it can be analyzed, modeled, or acted upon.

Despite its importance, ingestion is often where data quality, reliability, and scalability problems begin. Delayed records, schema mismatches, dropped events, and brittle connectors can quietly break entire analytics stacks, especially as systems grow more complex and distributed.

Whether you're building a real-time recommendation engine or syncing data across microservices, getting ingestion right is critical.

We’ll walk through ten best practices to help you design ingestion systems that are fast, fault-tolerant, and ready to scale. From schema enforcement to error handling and observability, these principles are designed to reduce operational pain and future-proof your data infrastructure.

1. Choose the Right Ingestion Pattern for Your Use Case

Not all ingestion pipelines are built the same, nor should they be. The first decision you’ll need to make is whether to use batch, streaming, or a hybrid approach, depending on the latency, volume, and reliability requirements of your system.

Batch Ingestion

Ideal for use cases where data can be collected and delivered at scheduled intervals. Think of nightly ETL jobs that pull records from SaaS tools or legacy databases into a warehouse.

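- Common tools: Airflow, Fivetran, Snowflake (bulk loads with COPY INTO), and dbt for transforming data once it lands. Snowpipe, by contrast, loads files continuously in micro-batches and sits closer to the streaming end of the spectrum.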

- Pros: Simple to manage, easier to debug, lower compute costs for large datasets

- Cons: Limited in time-sensitive scenarios; slower to reflect new data

Streaming Ingestion

Best suited for real-time applications that require immediate access to fresh data—like personalization engines, alerting systems, or fraud detection.

- Common tools: Apache Kafka, Apache Pulsar, AWS Kinesis, Apache Flink

- Pros: Low latency, continuous processing, event-driven architecture support

- Cons: More operational complexity, requires careful handling of ordering, retries, and backpressure

Hybrid Approach

Most modern data architectures use a mix of both. For example, product events might flow in via Kafka while nightly CRM updates arrive in batches.

Start by understanding the nature of your data and its downstream dependencies. Then choose an ingestion pattern that aligns with how fast you need data to flow and how often it changes.

2. Define and Enforce Data Contracts Early

One of the most common causes of broken pipelines is schema drift: upstream producers change the shape of their data without warning, and downstream consumers break. The fix? Treat your data interfaces like APIs and formalize them with data contracts.

A data contract defines the expected structure, types, and semantics of ingested data. It can include:

- Required and optional fields

- Data types (e.g. string, integer, timestamp)

- Validation rules (e.g. non-null constraints, enum values)

- Expectations for units, formats, and identifiers

To enforce contracts:

- Use schema definitions (like Avro, Protobuf, or JSON Schema) to validate incoming data at the point of ingestion

- Apply automatic validation checks within streaming pipelines or ingestion gateways

- Break builds or alert when incompatible schema changes are introduced

This practice shifts ingestion from “best-effort parsing” to “explicitly defined expectations,” reducing the risk of downstream failure and improving confidence across data teams.

Bonus: Strong contracts make it easier to onboard new producers and automate schema evolution with tools like Confluent Schema Registry, Dataplex, or custom schema validation layers.
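To make the idea concrete, here is a minimal sketch of contract validation at the point of ingestion, written in plain Python rather than Avro, Protobuf, or JSON Schema. The `FieldSpec` class, the `PURCHASE_CONTRACT` definition, and every field name in it are hypothetical and exist only for illustration; a real pipeline would more likely lean on a schema library or registry.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class FieldSpec:
    """One field in a hypothetical data contract."""
    type_: type
    required: bool = True
    check: Optional[Callable[[Any], bool]] = None  # extra validation rule


# Hypothetical contract for a "purchase" event; field names are illustrative.
PURCHASE_CONTRACT = {
    "event_id": FieldSpec(str),
    "user_id": FieldSpec(str),
    "amount_cents": FieldSpec(int, check=lambda v: v >= 0),
    "currency": FieldSpec(str, check=lambda v: v in {"USD", "EUR", "GBP"}),
    # fromisoformat raises ValueError on strings it cannot parse.
    "occurred_at": FieldSpec(str, check=lambda v: bool(datetime.fromisoformat(v))),
    "coupon_code": FieldSpec(str, required=False),
}


def validate(record: dict, contract: dict) -> list:
    """Return a list of contract violations; an empty list means the record passes."""
    errors = []
    for name, spec in contract.items():
        if name not in record or record[name] is None:
            if spec.required:
                errors.append(f"missing required field: {name}")
            continue
        value = record[name]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        wrong_type = not isinstance(value, spec.type_) or (
            spec.type_ is int and isinstance(value, bool)
        )
        if wrong_type:
            errors.append(f"wrong type for {name}: expected {spec.type_.__name__}")
            continue
        if spec.check is not None:
            try:
                ok = spec.check(value)
            except ValueError:
                ok = False
            if not ok:
                errors.append(f"validation rule failed for {name}")
    # Fields the contract does not know about are reported rather than silently kept.
    for name in sorted(record.keys() - contract.keys()):
        errors.append(f"unexpected field: {name}")
    return errors
```

A gateway could call `validate(record, PURCHASE_CONTRACT)` on each incoming record and reject or quarantine anything that returns a non-empty list. Whether unexpected fields should be an error or merely a warning is a design choice that depends on how strict you want the contract to be.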

3. Prioritize Idempotency and Deduplication

In distributed systems, duplicate events are inevitable. Network retries, service restarts, and inconsistent producer behavior can all result in the same data being ingested multiple times. If your ingestion pipeline isn’t designed to handle this, you risk skewed metrics, double-counted transactions, and corrupted downstream models.

To prevent this, your ingestion system should be idempotent: processing the same event more than once should not change the final outcome. That requires implementing robust deduplication logic as early in the pipeline as possible.

Here are a few proven strategies:

- Use unique event IDs: Every ingested event should include a globally unique identifier (UUID or ULID). Deduplication can then be handled via keyed storage, caches, or lookup tables that discard previously seen IDs.

- Implement window-based deduplication for streams: In streaming pipelines (e.g. Kafka + Flink), use event-time windows and watermarking to group and deduplicate near-duplicate events. This is especially useful for handling late-arriving data.

- Hash-based deduplication: Generate a hash of the event payload and use it to check for duplicates in memory or temporary storage. While less precise than ID-based methods, it’s helpful when upstream systems don’t assign event IDs.

Idempotency ensures your ingestion logic is repeatable, resilient, and accurate, even under failure conditions or scale surges.
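The ID-based and hash-based strategies above can be sketched together in a few lines. This is an in-memory illustration only: the `Deduplicator` class, the `event_id`, `user_id`, and `amount` field names, and the fixed-capacity eviction policy are all assumptions. A production system would typically keep the seen-keys set in a shared store with a time-to-live.

```python
import hashlib
import json
from collections import OrderedDict


class Deduplicator:
    """Remembers the most recent `capacity` event keys and flags repeats."""

    def __init__(self, capacity: int = 100_000):
        self.capacity = capacity
        self._seen = OrderedDict()

    @staticmethod
    def key_for(event: dict) -> str:
        # Prefer the producer-assigned ID; fall back to a hash of the payload.
        if "event_id" in event:
            return f"id:{event['event_id']}"
        # Sorting keys makes the hash independent of field order.
        canonical = json.dumps(event, sort_keys=True, separators=(",", ":"))
        return "hash:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def is_duplicate(self, event: dict) -> bool:
        key = self.key_for(event)
        if key in self._seen:
            self._seen.move_to_end(key)  # keep recently seen keys longest
            return True
        self._seen[key] = None
        if len(self._seen) > self.capacity:
            self._seen.popitem(last=False)  # evict the oldest key
        return False


def apply_once(events, totals: dict, dedup: Deduplicator) -> None:
    """Idempotent write: each distinct event changes the totals at most once."""
    for event in events:
        if not dedup.is_duplicate(event):
            user = event["user_id"]
            totals[user] = totals.get(user, 0) + event["amount"]
```

Replaying the same batch through `apply_once` with the same `Deduplicator` leaves `totals` unchanged, which is the property you want when a producer retries. Note the trade-off of a bounded memory: a duplicate that arrives after its key has been evicted will be treated as new.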

4. Instrument Robust Error Handling and Dead Letter Queues

No matter how well you design your pipeline, bad data will eventually show up. Whether it’s a malformed payload, a missing field, or an unexpected schema change, your ingestion system should fail gracefully, not silently or catastrophically.

To make that possible, implement structured error handling and dead letter queues (DLQs).

Dead Letter Queues

A DLQ is a secondary destination where failed events are routed for later inspection and reprocessing. Rather than discarding problematic records, you preserve them along with metadata such as:

- Timestamp of failure

- Source system or partition

- Error type or parsing stack trace

This makes it easier to triage issues, recover lost data, and debug anomalies without interrupting the main data flow.

Best practices for error handling:

- Tag records with failure codes or validation statuses

- Set thresholds for retries, then escalate to the DLQ

- Separate transient errors (e.g., network failures) from structural ones (e.g., missing fields)

- Monitor DLQ volumes as a signal of upstream quality or system health

Well-instrumented pipelines expose when and why things go wrong. This visibility helps teams move faster, resolve issues proactively, and build more resilient ingestion architectures.
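As a rough sketch of how these pieces fit together, the snippet below retries transient failures with exponential backoff and sends anything it cannot process to a dead letter queue along with failure metadata. The exception classes, the `ingest` function, and the list standing in for a DLQ are hypothetical; in practice the DLQ would be a separate topic, queue, or table.

```python
import json
import time
from datetime import datetime, timezone


class TransientError(Exception):
    """Worth retrying, e.g. a network timeout."""


class StructuralError(Exception):
    """Retrying will not help, e.g. a missing field."""


def parse(raw: str) -> dict:
    try:
        record = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise StructuralError(f"malformed JSON: {exc.msg}") from exc
    if not isinstance(record, dict) or "event_id" not in record:
        raise StructuralError("missing field: event_id")
    return record


def ingest(raw, source, deliver, dead_letters, max_retries=3, base_delay=0.5):
    """Parse and deliver one record; route it to the DLQ if it cannot be processed."""
    attempts = 0
    try:
        record = parse(raw)  # structural problems skip retries entirely
        while True:
            attempts += 1
            try:
                deliver(record)
                return True
            except TransientError:
                if attempts > max_retries:
                    raise  # retry budget exhausted: escalate to the DLQ
                time.sleep(base_delay * 2 ** (attempts - 1))  # exponential backoff
    except (StructuralError, TransientError) as exc:
        dead_letters.append({
            "payload": raw,  # keep the original bytes for reprocessing
            "source": source,
            "failed_at": datetime.now(timezone.utc).isoformat(),
            "error_type": type(exc).__name__,
            "error": str(exc),
            "attempts": attempts,
        })
        return False
```

Here `deliver` is any callable that writes the record downstream and raises `TransientError` when the failure is worth retrying. Counting the entries in `dead_letters` by `error_type` gives you the DLQ volume signal mentioned above.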

5. Normalize Timestamps and Time Zones at Ingestion

Inconsistent timestamps are one of the most common sources of confusion in data pipelines, especially when data flows in from multiple sources, devices, or regions.

To avoid downstream ambiguity, normalize all time-related fields as early as possible in the ingestion process.

Best practices:

- Store all timestamps in UTC to maintain a single source of temporal truth.

- Capture both event time and ingestion time, especially for streaming pipelines. This allows you to measure processing delays and support event-time windowing in tools like Apache Flink or Kafka Streams.

- Use a consistent format, such as ISO 8601, to simplify parsing and debugging.

- If source data includes ambiguous local time, enrich it with explicit time zone context before normalization.

Proper timestamp handling ensures that downstream systems can safely aggregate, join, and replay data without inconsistencies. It’s a small upfront cost that saves hours of troubleshooting later.
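Here is a small sketch of these practices using only Python's standard library. The function names and the `occurred_at` field are hypothetical. The example assumes source timestamps arrive as ISO 8601 strings, and that when a timestamp carries no offset you know the source's time zone from context. A fixed offset is used below for simplicity; a `zoneinfo.ZoneInfo` object can be passed instead when daylight saving rules matter.

```python
from datetime import datetime, timedelta, timezone, tzinfo
from typing import Optional


def to_utc_iso(raw: str, source_tz: Optional[tzinfo] = None) -> str:
    """Parse an ISO 8601 timestamp and return it as UTC in ISO 8601 format."""
    parsed = datetime.fromisoformat(raw)
    if parsed.tzinfo is None:
        # Naive local time is ambiguous: refuse to guess the zone.
        if source_tz is None:
            raise ValueError(f"ambiguous local time without a time zone: {raw!r}")
        parsed = parsed.replace(tzinfo=source_tz)
    return parsed.astimezone(timezone.utc).isoformat()


def stamp(event: dict, source_tz: Optional[tzinfo] = None,
          now: Optional[datetime] = None) -> dict:
    """Attach normalized event time, ingestion time, and the lag between them."""
    now = now or datetime.now(timezone.utc)
    event_time = to_utc_iso(event["occurred_at"], source_tz)
    lag = now - datetime.fromisoformat(event_time)
    return {
        **event,
        "event_time": event_time,
        "ingested_at": now.isoformat(),
        "lag_seconds": lag.total_seconds(),
    }


# Example: a source known to report local time at a fixed UTC-5 offset.
UTC_MINUS_5 = timezone(timedelta(hours=-5))
```

Raising on a naive timestamp with no known zone is deliberate: silently assuming UTC is a common way for hours-off errors to slip into downstream aggregates.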

6. Design for Backpressure and Throughput Variability

Ingestion systems need to handle more than steady-state workloads; they must survive bursts, traffic spikes, and upstream anomalies without dropping data or degrading performance.

This requires designing for backpressure: when your system temporarily can’t process incoming data as fast as it arrives, it needs a way to buffer the excess or signal upstream producers to slow down.

How to handle it:

- Buffer with queues: Use durable message brokers like Kafka, RabbitMQ, or SQS to decouple producers from consumers.

- Enable autoscaling: Use container orchestration tools (like Kubernetes) to scale ingestion workers based on queue depth or throughput metrics.

- Partition for parallelism: Distribute load across partitions or shards to improve concurrency and reduce latency.

- Apply rate limiting and retries: Control input rates from upstream systems and avoid overloading downstream dependencies.

Monitoring throughput trends and failure modes under pressure is just as important as measuring baseline performance. A well-architected ingestion layer should absorb volatility rather than amplify it.
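Two of the ideas above, buffering and scaling on queue depth, can be illustrated with a toy example. The `BoundedBuffer` class is an in-memory stand-in for a durable broker, and `desired_workers` is a hypothetical scaling rule; neither reflects how Kafka, SQS, or Kubernetes implement these features internally.

```python
import queue


class BoundedBuffer:
    """Fixed-size buffer that refuses writes when full instead of growing without limit."""

    def __init__(self, max_size: int):
        self._q = queue.Queue(maxsize=max_size)
        self.rejected = 0

    def offer(self, event) -> bool:
        """Try to enqueue; False tells the producer to slow down or retry later."""
        try:
            self._q.put_nowait(event)
            return True
        except queue.Full:
            self.rejected += 1
            return False

    def drain(self, max_items: int) -> list:
        """Remove and return up to `max_items` events in arrival order."""
        items = []
        while len(items) < max_items:
            try:
                items.append(self._q.get_nowait())
            except queue.Empty:
                break
        return items

    def depth(self) -> int:
        return self._q.qsize()


def desired_workers(queue_depth: int, per_worker: int,
                    min_workers: int = 1, max_workers: int = 10) -> int:
    """One worker per `per_worker` queued events, clamped to the allowed range."""
    needed = -(-queue_depth // per_worker)  # ceiling division
    return max(min_workers, min(max_workers, needed))
```

The key design choice is that `offer` returns `False` rather than blocking forever or dropping the event silently. That return value is the backpressure signal, and the `rejected` counter gives you something to alert on.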

7. Implement Observability Across the Ingestion Pipeline

You can’t fix what you can’t see. Observability is essential for maintaining data quality, performance, and reliability in complex ingestion systems. Without clear visibility into how data is flowing (or failing), teams are flying blind.

What to monitor:

- Ingestion throughput: Events per second, per source or topic

- Lag and latency: Time between event occurrence and availability downstream

- Error rates: Percentage of failed records, categorized by type (schema mismatch, missing fields, etc.)

- Dropped or retried events: Volume and cause of retries, dead letters, or discarded records

Tooling and instrumentation:

- Emit structured logs and ship them with collectors such as Fluent Bit or Logstash to track event-level details

- Apply distributed tracing (e.g., OpenTelemetry) to trace data flow across microservices

- Monitor with Prometheus, Grafana, or vendor-specific dashboards (e.g., Datadog, New Relic)

- Set alerts on abnormal drops, delays, or backlog growth

Observability transforms ingestion from a black box into a transparent, measurable system. It shortens time to resolution, prevents silent failures, and builds confidence across data, engineering, and product teams.
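To show what these signals look like in code, here is a minimal sketch that tracks throughput, lag, and error rates for one reporting window. The `IngestionMetrics` class, the `alerts` helper, and the default thresholds are hypothetical; in a real deployment you would export these values to a system like Prometheus rather than compute them by hand.

```python
import math
from collections import Counter
from datetime import datetime


class IngestionMetrics:
    """Accumulates counts and lags for one reporting window."""

    def __init__(self):
        self.succeeded = 0
        self.errors_by_type = Counter()
        self.lags = []  # seconds between event time and downstream availability

    def record_success(self, event_time: datetime, available_at: datetime) -> None:
        self.succeeded += 1
        self.lags.append((available_at - event_time).total_seconds())

    def record_failure(self, error_type: str) -> None:
        self.errors_by_type[error_type] += 1

    def snapshot(self, window_seconds: float) -> dict:
        failed = sum(self.errors_by_type.values())
        total = self.succeeded + failed
        ordered = sorted(self.lags)
        # Nearest-rank 95th percentile of lag.
        p95 = ordered[math.ceil(0.95 * len(ordered)) - 1] if ordered else None
        return {
            "events_per_second": total / window_seconds,
            "error_rate": failed / total if total else 0.0,
            "p95_lag_seconds": p95,
            "errors_by_type": dict(self.errors_by_type),
        }


def alerts(snapshot: dict, max_error_rate: float = 0.01,
           max_p95_lag: float = 60.0) -> list:
    """Return the names of the thresholds this snapshot breaches."""
    breached = []
    if snapshot["error_rate"] > max_error_rate:
        breached.append("error_rate")
    if snapshot["p95_lag_seconds"] is not None and snapshot["p95_lag_seconds"] > max_p95_lag:
        breached.append("p95_lag")
    return breached
```

Tracking a high percentile of lag rather than the average is a deliberate choice here: a handful of very late events can hide behind a healthy-looking mean.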

8. Plan for Schema Evolution and Versioning

Data is never static. Fields get added, formats change, and new use cases emerge. If your ingestion pipeline isn’t designed to accommodate evolving schemas, even small changes can cause major disruptions downstream.

Why schema evolution matters:

- Producers may update fields, change data types, or restructure payloads

- Consumers may expect different versions of the same dataset

- Backward and forward compatibility are essential for replayability, audits, and historical analysis

Best practices:

- Use versioned schemas with tools like Avro, Protobuf, or JSON Schema, and store them in a central registry (e.g., Confluent Schema Registry or Apicurio)

- Support compatibility modes: Prefer backward-compatible changes (e.g., adding optional fields) over breaking changes (e.g., removing required fields)

- Validate at ingestion: Apply schema validation before data reaches your core systems to prevent bad records from propagating

- Track schema versions in metadata: Attach a version identifier to each ingested record so downstream systems can parse appropriately

Schema evolution is inevitable. Planning for it upfront allows your ingestion system to grow and adapt without creating instability for consumers.
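One way to picture version tracking is a small upgrade chain that brings older records up to the latest schema before they move downstream. Everything in this sketch is hypothetical: the `schema_version` and `payload` envelope, the `channel` field, and the `UPGRADERS` table. Registry-backed formats like Avro resolve reader and writer schemas for you, so treat this as an illustration of the idea, not a substitute.

```python
LATEST_VERSION = 2


def upgrade_v1_to_v2(payload: dict) -> dict:
    # v2 added the optional "channel" field; older records get a default.
    return {**payload, "channel": payload.get("channel", "unknown")}


# Maps a version to the function that upgrades it to the next version.
UPGRADERS = {1: upgrade_v1_to_v2}


def normalize(record: dict) -> dict:
    """Upgrade a versioned record step by step until it matches LATEST_VERSION."""
    version = record.get("schema_version")
    if not isinstance(version, int) or not 1 <= version <= LATEST_VERSION:
        # Unknown versions are rejected here so they can be routed for inspection.
        raise ValueError(f"unrecognized schema version: {version!r}")
    payload = dict(record["payload"])  # copy so the caller's record is untouched
    while version < LATEST_VERSION:
        payload = UPGRADERS[version](payload)
        version += 1
    return {"schema_version": LATEST_VERSION, "payload": payload}
```

Because the only change between versions is an optional field with a default, old records can be replayed through `normalize` at any time, which is the practical payoff of preferring backward-compatible changes.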

9. Secure Data at the Edge and In Transit

Data ingestion is often the first point where sensitive information enters your system. Without proper controls in place, this stage can become a major vulnerability, from exposed personally identifiable information (PII) to insecure transport channels.

Key security practices:

- Encrypt data in transit: Use TLS for all inbound connections, whether ingesting from APIs, message brokers, or event streams. Never send raw payloads over unsecured channels.

- Apply access controls and authentication: Limit who or what can publish to ingestion endpoints. Use API keys, OAuth tokens, IAM roles, or signed messages to verify trusted sources.

- Mask or tokenize PII at the edge: If user data includes names, emails, or identifiers, apply redaction, hashing, or tokenization before storing or processing further downstream.

- Implement audit logging: Record who sent what data and when. Include metadata for traceability, especially in regulated environments like finance, healthcare, or education.

- Restrict scope of access: Producers should only publish to specific topics, streams, or endpoints. Isolate ingestion components from broader data systems where possible.

Security at the ingestion layer is about establishing trust, preventing leaks, and minimizing the blast radius of failures or misconfigurations.
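The signed-message and tokenization practices can be sketched with Python's standard `hmac` and `hashlib` modules. The function names, the `PII_FIELDS` tuple, and the idea of one shared secret per producer are assumptions made for this example; key storage, rotation, and transport encryption are out of scope here.

```python
import hashlib
import hmac
import json


def sign(body: bytes, secret: bytes) -> str:
    """HMAC-SHA256 signature a producer would attach to its payload."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify(body: bytes, signature: str, secret: bytes) -> bool:
    # compare_digest runs in constant time to avoid leaking match position.
    return hmac.compare_digest(sign(body, secret), signature)


def tokenize(value: str, key: bytes) -> str:
    """Keyed hash: stable enough for joins, not reversible without the key."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


# Hypothetical list of fields treated as PII in this example.
PII_FIELDS = ("email", "name")


def accept(body: bytes, signature: str, producer_secret: bytes, pii_key: bytes) -> dict:
    """Verify the sender, then tokenize PII before the record goes any further."""
    if not verify(body, signature, producer_secret):
        raise PermissionError("signature mismatch: untrusted producer or altered payload")
    record = json.loads(body)
    for field in PII_FIELDS:
        if record.get(field) is not None:
            record[field] = tokenize(str(record[field]), pii_key)
    return record
```

Using a keyed hash instead of a plain SHA-256 matters for fields like email addresses, where an attacker could otherwise hash a list of known addresses and match them against stored values.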

10. Test with Realistic Loads and Edge Cases

Production data rarely behaves like your staging environment. Late events, malformed payloads, duplicate records, and unexpected spikes are common—and if your ingestion pipeline can’t handle them, it will break when you least expect it.

How to test effectively:

- Replay production traffic (anonymized) to simulate real-world load, event structure, and timing irregularities

- Inject failure scenarios, such as:

  - Events missing required fields

  - Out-of-order or delayed messages

  - Payloads exceeding size limits

  - Unrecognized schema versions

- Stress test ingestion throughput with synthetic load generators to evaluate autoscaling, latency, and backpressure handling

- Establish validation pipelines to compare input vs. output records and ensure completeness and fidelity

- Automate ingestion testing in CI to catch regressions early. Include schema validations, format checks, and deduplication tests as part of every build.

Testing ingestion means exercising variability, not just the happy path. The more edge cases you handle upfront, the more stable and predictable your system will be in production.
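Two of the testing ideas above, failure injection and input-versus-output validation, lend themselves to small helpers you can run in CI. The functions below are a sketch under assumptions: the `blob` and `schema_version` fields are hypothetical, and events are assumed to carry an ID you can compare on both sides of the pipeline.

```python
import copy
from collections import Counter


def edge_cases(valid: dict, required_field: str, max_bytes: int) -> dict:
    """Build deliberately broken variants of a known-good event."""
    missing = copy.deepcopy(valid)
    missing.pop(required_field, None)

    oversized = copy.deepcopy(valid)
    oversized["blob"] = "x" * (max_bytes + 1)  # one byte over the limit

    unknown_version = copy.deepcopy(valid)
    unknown_version["schema_version"] = 999

    return {
        "missing_required_field": missing,
        "oversized_payload": oversized,
        "unknown_schema_version": unknown_version,
    }


def reconcile(input_ids, output_ids, expected_rejects=()) -> dict:
    """Compare what went in with what came out of the pipeline."""
    received = Counter(output_ids)
    expected = set(input_ids) - set(expected_rejects)
    return {
        "missing": sorted(expected - set(received)),      # lost in the pipeline
        "unexpected": sorted(set(received) - expected),   # should not have arrived
        "duplicated": sorted(i for i, n in received.items() if n > 1),
    }
```

A CI check could feed the `edge_cases` variants through the pipeline alongside valid events, then assert that `reconcile` reports nothing missing, unexpected, or duplicated once the deliberately broken events are listed in `expected_rejects`.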

Build Ingestion Systems That Scale With Confidence

Data ingestion sits at the foundation of every modern data pipeline. Whether you're supporting real-time personalization, analytics, or machine learning, ingestion is where reliability, consistency, and trust begin.

Thoughtful ingestion design makes it easier to evolve your data systems without sacrificing quality or velocity.

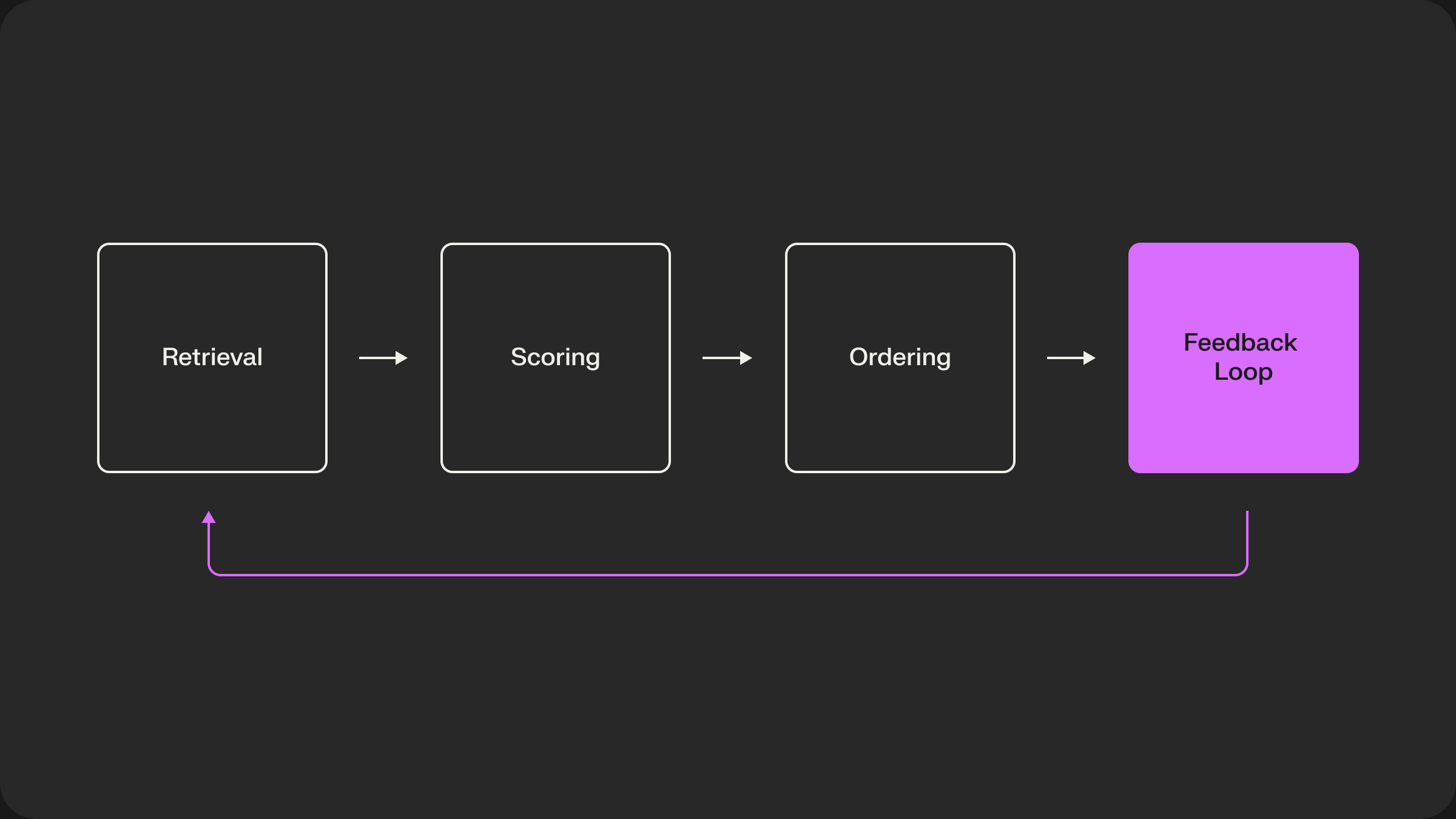

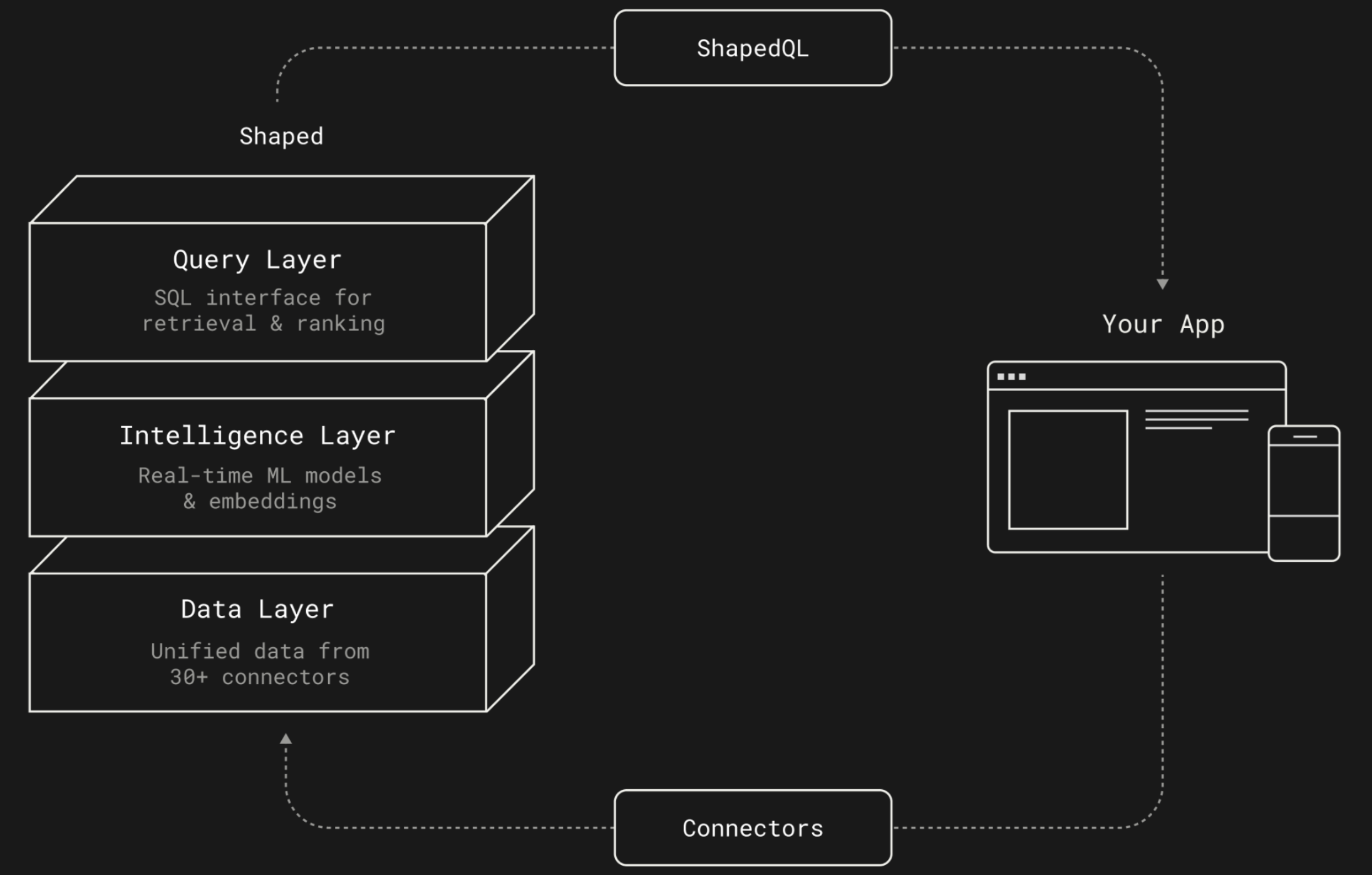

The strongest data platforms aren’t just fast; they’re built to adapt, recover, and scale under pressure. Shaped makes it easy to sync users, items, and events in real time, giving your team a clean foundation for building personalized experiences without managing ingestion infrastructure from scratch.

Ready to build smarter pipelines without the heavy lift? Start your free trial today.