Personalization is the name of the game in today's digital world. Recommendation systems are key players, helping us discover relevant products, articles, movies, and more. While Collaborative Filtering leverages the "wisdom of the crowd," another fundamental approach works differently: Content-Based Filtering (CBF).

Instead of looking at what similar users liked, Content-Based Filtering focuses directly on you and the content of the items you've interacted with positively in the past. The core idea is simple yet powerful: "If you liked that, you might also like this because they share similar characteristics."

This post dives deep into Content-Based Filtering:

- The fundamental principles and how it works.

- Its history and evolution from simple keywords to sophisticated embeddings.

- The challenges involved in building CBF systems from scratch.

- How modern AI (Language and Vision models) revolutionizes content understanding.

- How platforms like Shaped implement different flavors of content-based recommendations.

Let's explore the world of recommending based on content similarity.

What is Content-Based Filtering? The Core Idea

At its heart, Content-Based Filtering operates on two key components:

- Item Representation: Each item is described by a set of features or attributes. These could be:

  - Textual: Keywords, descriptions, categories, tags, genres.

  - Structured: Author, director, brand, price, year.

  - Visual: Images, video thumbnails.

  - Audio: Sound features, music genre metadata.

- User Profile: A profile is built for each user, summarizing their preferences based on the features of items they have liked or otherwise interacted with positively in the past.

The recommendation process generally follows these steps:

- Analyze Content: Extract relevant features from items the user has liked.

- Build Profile: Create or update the user's profile based on these features (e.g., a weighted vector of features).

- Match Content: Compare the user's profile to the feature representations of other items not yet seen by the user.

- Recommend: Suggest items whose features closely match the user's profile, typically using a similarity metric.

(Imagine a diagram: User interacts with Item A (features: Sci-Fi, Space). User Profile now reflects interest in Sci-Fi/Space. System compares profile to Item B (features: Sci-Fi, Robots) and Item C (features: Romance, History). Item B is deemed more similar and recommended.)
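The four steps can be traced on that toy example in a few lines of Python. This is a minimal sketch, not a production design: the five-tag vocabulary, one-hot encoding, and plain averaging as the profile builder are assumptions made for illustration.

```python
import math

# Hypothetical tag vocabulary for the toy diagram; real systems extract many more features.
FEATURES = ["Sci-Fi", "Space", "Robots", "Romance", "History"]


def encode(tags):
    """Analyze content: one-hot encode an item's tags over FEATURES."""
    return [1.0 if f in tags else 0.0 for f in FEATURES]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


items = {
    "Item A": encode({"Sci-Fi", "Space"}),
    "Item B": encode({"Sci-Fi", "Robots"}),
    "Item C": encode({"Romance", "History"}),
}

# Build profile: average the vectors of the items the user liked.
liked = ["Item A"]
profile = [sum(items[i][k] for i in liked) / len(liked) for k in range(len(FEATURES))]

# Match content: score every item the user hasn't seen yet.
scores = {name: cosine(profile, vec) for name, vec in items.items() if name not in liked}

# Recommend: rank by similarity, highest first.
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['Item B', 'Item C']: B shares "Sci-Fi", C shares nothing
```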

The Journey of Content-Based Filtering: From Keywords to Semantics

CBF has been around since the early days of information retrieval and recommendation systems.

- Early Days (Keywords & TF-IDF): The earliest forms relied heavily on textual features. Techniques like TF-IDF (Term Frequency-Inverse Document Frequency) were used to represent items (e.g., articles, documents) as vectors of keyword weights. User profiles were similarly vectors summarizing the important keywords from liked items. Similarity was often calculated using Cosine Similarity.

  - Challenge: This approach struggled with synonyms (e.g., "film" vs. "movie"), polysemy (words with multiple meanings), and understanding deeper semantic relationships. It couldn't easily handle non-textual features.

- Vector Space Models & Feature Engineering: The concept expanded to incorporate more structured features (like genre, actors, brand). This required significant feature engineering – manually defining how to represent different types of content and how to combine them into a unified item representation and user profile.

  - Challenge: Feature engineering is labor-intensive, domain-specific, and brittle. Combining heterogeneous features (text, categories, numerical values) into a meaningful similarity score is complex.

- The Need for Deeper Understanding: As content became richer (images, complex descriptions) and user expectations grew, the limitations of simple feature matching became apparent. The need arose for models that could understand the meaning behind the content, not just surface-level keywords.
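The synonym problem of the keyword era is easy to reproduce. The sketch below computes one common TF-IDF variant by hand (raw term count times log(N / df), whitespace tokenization, no smoothing); real toolkits offer other variants, so treat the exact weights as illustrative.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """One sparse {term: weight} dict per doc, weight = raw count * log(N / df)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many docs does each term appear?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    return [
        {term: count * math.log(n_docs / df[term]) for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]


def cosine_sparse(a, b):
    """Cosine similarity between two sparse {term: weight} vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


docs = ["film about robots", "movie about robots", "book about cooking"]
liked, synonym, unrelated = tfidf_vectors(docs)

# "about" appears in every doc, so its IDF (and weight) is 0. "film" and "movie" are
# different tokens, so only the shared word "robots" contributes to the match.
print(round(cosine_sparse(liked, synonym), 2))    # 0.12, despite near-identical meaning
print(round(cosine_sparse(liked, unrelated), 2))  # 0.0
```

The first two descriptions mean the same thing, yet their similarity is low because the one word that carries their meaning never matches.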

How Content-Based Filtering Works: Key Steps & Components

Let's break down the traditional process:

- Item Representation / Feature Extraction:

  - This is arguably the most critical step: raw item content must be transformed into a structured format suitable for comparison.

  - Text: Clean the text, tokenize it, remove stop words, and represent it as Bag-of-Words counts or, more usefully, TF-IDF weights that down-weight very common terms.

  - Categorical: Use one-hot encoding or, more commonly now, learn embeddings for categories/tags.

  - Numerical: Normalize values (e.g., min-max scaling or standardization) so that features with large raw ranges don't dominate the similarity.

  - Output: Typically, an item profile vector v_i for each item i.

- User Profile Building:

  - Aggregate the feature vectors of items the user u has positively interacted with.

  - Simple Approach: Average the item vectors v_i liked by the user.

  - Weighted Approach: Give more weight to highly-rated items or more recent interactions.

  - Output: A user profile vector p_u.

- Similarity Calculation:

  - Measure the similarity between the user profile vector p_u and the vector v_j for each candidate item j.

  - Common Metric: Cosine Similarity, similarity(p_u, v_j) = (p_u ⋅ v_j) / (||p_u|| ||v_j||). It is the cosine of the angle between the two vectors, so it captures orientation rather than magnitude.

  - Other metrics like Dot Product or Euclidean Distance can also be used, depending on the vector representation.

- Recommendation Generation:

  - Rank candidate items j based on their similarity score to the user profile p_u.

  - Present the top-N most similar items as recommendations.
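Putting the four steps together, here is a minimal end-to-end sketch in plain Python. The catalog, the 1-5 rating scale, and the 30-day half-life used for recency decay are hypothetical choices for illustration; the weighting formula is one reasonable instance of the "Weighted Approach", not a standard.

```python
import math
from collections import Counter

# Hypothetical catalog mixing text, a categorical field, and a numeric field.
CATALOG = {
    "i1": {"text": "space opera with starships", "category": "scifi", "price": 12.0},
    "i2": {"text": "starships and space battles", "category": "scifi", "price": 15.0},
    "i3": {"text": "regency era love story", "category": "romance", "price": 9.0},
    "i4": {"text": "cozy mystery in a small village", "category": "mystery", "price": 8.0},
    "i5": {"text": "first contact with alien robots", "category": "scifi", "price": 30.0},
}


def build_item_vectors(catalog):
    """Step 1: bag-of-words text + one-hot category + min-max scaled price -> v_i."""
    vocab = sorted({w for item in catalog.values() for w in item["text"].split()})
    categories = sorted({item["category"] for item in catalog.values()})
    prices = [item["price"] for item in catalog.values()]
    lo, hi = min(prices), max(prices)
    vectors = {}
    for item_id, item in catalog.items():
        counts = Counter(item["text"].split())
        text_part = [float(counts[w]) for w in vocab]
        cat_part = [1.0 if item["category"] == c else 0.0 for c in categories]
        price_part = [(item["price"] - lo) / (hi - lo) if hi > lo else 0.0]
        vectors[item_id] = text_part + cat_part + price_part
    return vectors


def build_profile(vectors, interactions, half_life_days=30.0):
    """Step 2: weighted average of liked items, weight = rating * 0.5 ** (age / half_life)."""
    dim = len(next(iter(vectors.values())))
    profile, total = [0.0] * dim, 0.0
    for item_id, rating, age_days in interactions:
        weight = rating * 0.5 ** (age_days / half_life_days)
        profile = [p + weight * x for p, x in zip(profile, vectors[item_id])]
        total += weight
    return [p / total for p in profile] if total else profile


def cosine(a, b):
    """Step 3: cosine similarity between p_u and v_j."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(vectors, profile, seen, n=2):
    """Step 4: rank unseen items by similarity and keep the top n."""
    scored = [(cosine(profile, v), item_id) for item_id, v in vectors.items() if item_id not in seen]
    return [item_id for _, item_id in sorted(scored, reverse=True)[:n]]


vectors = build_item_vectors(CATALOG)
# (item_id, rating on a 1-5 scale, days since the interaction)
interactions = [("i1", 5, 2), ("i3", 3, 90)]
profile = build_profile(vectors, interactions)
print(recommend(vectors, profile, seen={"i1", "i3"}))  # ['i2', 'i5']
```

Concatenating the blocks without per-block weights lets the multi-word text block outweigh the single price feature. Deciding how to balance heterogeneous blocks like these is exactly the feature-engineering burden described earlier.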

The Rise of Deep Learning: Understanding Content Better

Deep learning has significantly enhanced CBF by providing much richer ways to represent content:

- Embeddings Rule: Instead of sparse TF-IDF vectors or manually engineered features, deep learning models learn dense embeddings: low-dimensional vectors that capture semantic meaning. Items with similar meanings (even if described with different words) end up with embeddings that lie close together in the vector space.

- Language Models: Word-embedding models like Word2Vec and GloVe and, especially, large pre-trained Transformers (BERT, Sentence-BERT, RoBERTa, etc.) can process item titles, descriptions, reviews, and tags to generate powerful text embeddings. Transformers in particular capture context, synonyms, and nuance far better than keyword-based methods, which helps bridge the semantic gap.

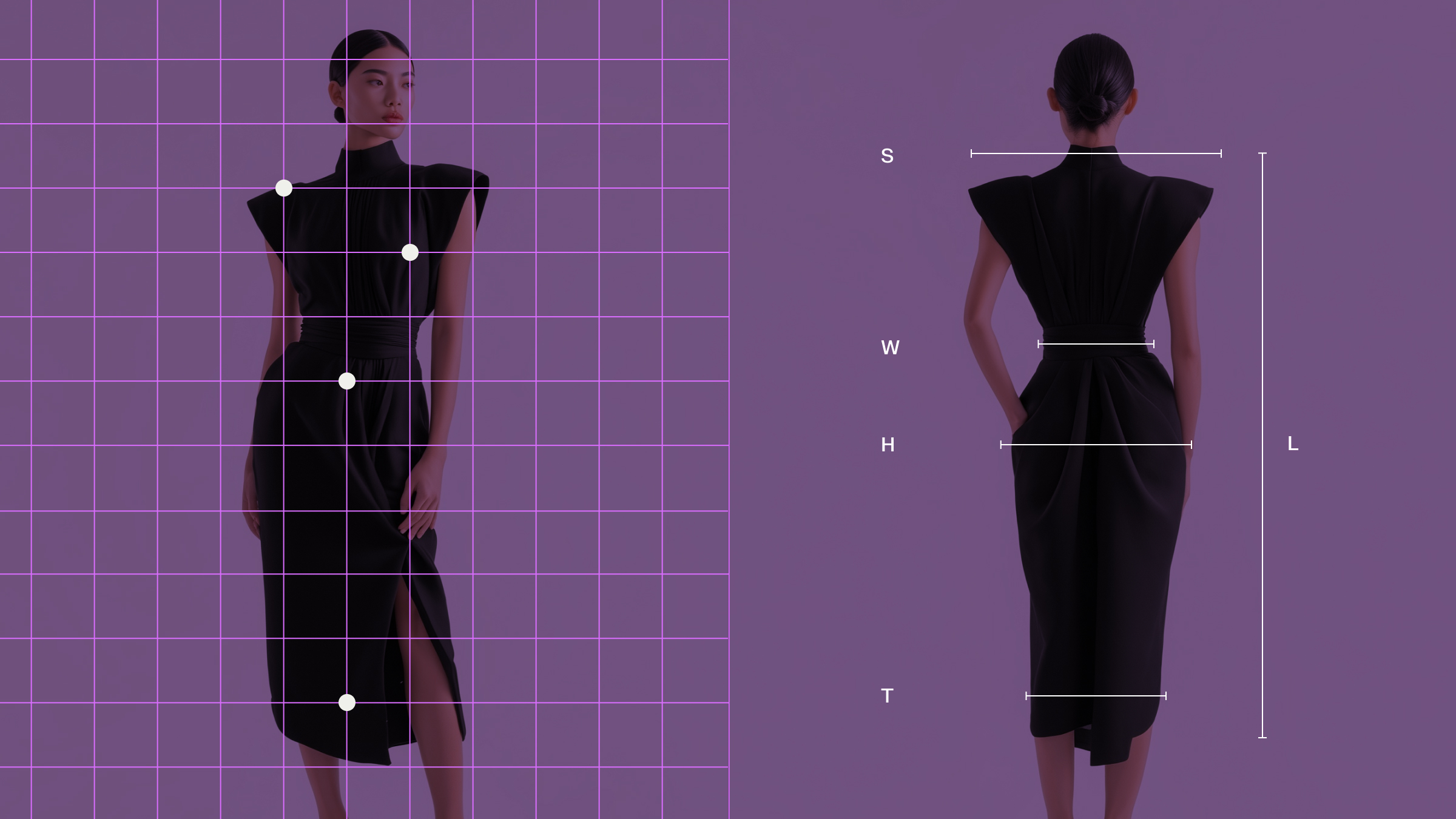

- Vision Models: Convolutional Neural Networks (CNNs like ResNet, EfficientNet) and Vision Transformers (ViT) can process item images to generate visual embeddings. This allows recommending visually similar products (e.g., fashion items, furniture).

- Multimodal Models: Models like CLIP learn joint embeddings for images and text, allowing recommendations based on cross-modal similarity (e.g., finding products matching a textual description or finding text descriptions matching an image).

These advanced embeddings can be used directly as the item representations (v_i) in the CBF pipeline, leading to more accurate and nuanced recommendations.
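To see why dense embeddings help, the sketch below replaces keyword vectors with mean-pooled word vectors. The 3-dimensional vectors are made up by hand purely for illustration; in practice they would come from a trained model (for example, the sentence-transformers library's `SentenceTransformer.encode`, which returns one embedding per input text), and the resulting item embeddings would slot into the same profile and similarity steps unchanged.

```python
import math

# Made-up 3-d "word embeddings" for illustration only. Real systems get dense vectors
# from a trained model rather than writing them by hand.
TOY_EMBEDDINGS = {
    "film": [0.90, 0.10, 0.00],
    "movie": [0.88, 0.12, 0.02],
    "robots": [0.10, 0.90, 0.10],
    "book": [0.20, 0.00, 0.90],
    "cooking": [0.00, 0.10, 0.95],
}


def embed(text):
    """Mean-pool the vectors of known words into one dense item embedding v_i."""
    vecs = [TOY_EMBEDDINGS[w] for w in text.lower().split() if w in TOY_EMBEDDINGS]
    if not vecs:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


liked = embed("film about robots")
# "film" and "movie" have nearby vectors, so the synonym no longer breaks the match.
print(round(cosine(liked, embed("movie about robots")), 3))
print(round(cosine(liked, embed("book about cooking")), 3))
```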

Building Content-Based Filtering From Scratch: The Challenges

While the concept is straightforward, building a robust CBF system isn't trivial:

- Feature Engineering/Extraction: Still a challenge, even with deep learning. Choosing the right pre-trained models, fine-tuning them, and deciding which features (text, image, structured) to use requires expertise.

- Scalability: Calculating the similarity between a user profile and millions of item vectors in real time is computationally expensive, so production systems typically rely on Approximate Nearest Neighbor (ANN) search over the item embeddings.

- User Profile Dynamics: How quickly should profiles adapt to new interests? How much weight should be given to older vs. newer interactions? Keeping profiles fresh and relevant is complex.

- Overspecialization (Filter Bubble): CBF tends to recommend items very similar to past interactions. This can limit discovery and serendipity, trapping users in a narrow range of content. It doesn't inherently introduce novelty from outside the user's established taste profile.

- Content Quality Dependence: The quality of recommendations heavily depends on the quality and richness of the item content/metadata available. Poor descriptions lead to poor recommendations.

- User Cold Start: While CBF handles item cold start well (as long as the new item has content features), it still requires some user interaction history to build an initial user profile.
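On the scalability point, one of the simplest ANN techniques for cosine similarity is random-hyperplane locality-sensitive hashing (LSH). The sketch below is illustrative only: the class name, the 8-planes-by-4-tables setting, and the random catalog are assumptions, and production systems usually rely on a dedicated ANN library or vector database instead.

```python
import math
import random
from collections import defaultdict


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class HyperplaneLSH:
    """Random-hyperplane LSH: an approximate nearest-neighbor index for cosine similarity.

    Bit k of a vector's signature records which side of random hyperplane k it lies on.
    Vectors separated by a small angle usually share a signature, so a query only
    scores the items in its own bucket in each table instead of the whole catalog.
    """

    def __init__(self, dim, n_planes=8, n_tables=4, seed=0):
        rng = random.Random(seed)
        self.tables = []
        for _ in range(n_tables):
            planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
            self.tables.append((planes, defaultdict(list)))

    @staticmethod
    def _signature(planes, vec):
        return tuple(sum(p * x for p, x in zip(plane, vec)) >= 0.0 for plane in planes)

    def add(self, item_id, vec):
        for planes, buckets in self.tables:
            buckets[self._signature(planes, vec)].append((item_id, vec))

    def candidates(self, vec):
        """Union of the items sharing the query's bucket in any table."""
        found = {}
        for planes, buckets in self.tables:
            for item_id, item_vec in buckets.get(self._signature(planes, vec), []):
                found[item_id] = item_vec
        return found

    def query(self, vec, k=5):
        """Exactly re-score only the candidates, then return the top-k item ids."""
        scored = sorted(((cosine(vec, v), i) for i, v in self.candidates(vec).items()), reverse=True)
        return [item_id for _, item_id in scored[:k]]


rng = random.Random(1)
item_vectors = {i: [rng.gauss(0.0, 1.0) for _ in range(16)] for i in range(1000)}
index = HyperplaneLSH(dim=16)
for item_id, vec in item_vectors.items():
    index.add(item_id, vec)

profile = item_vectors[42]  # stand-in for a user profile vector p_u
print(len(index.candidates(profile)), "of", len(item_vectors), "items scored")
print(index.query(profile, k=3))
```

More planes per table make buckets smaller (faster queries, more missed neighbors); more tables raise recall at the cost of memory.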

Content-Based Filtering in Practice: The Shaped Approach

Modern platforms like Shaped abstract away many of the implementation complexities and offer flexible ways to leverage content similarity. Shaped supports several policy_types under its embedding_policy and scoring_policy configurations that implement different flavors of content-based logic:

- item-content-similarity:

  - How it works: This policy closely follows the traditional CBF pattern but uses modern embeddings. It computes item embeddings based on item attributes (e.g., text embeddings from descriptions, categorical embeddings from tags). The user embedding (profile) is then computed by pooling the embeddings of items the user has interacted with.

  - Use Case: Classic CBF - recommend items similar to those the user previously engaged with.

  - Configuration Example (Scoring):

- user-content-similarity:

  - How it works: This flips the perspective slightly. It computes user embeddings based on user attributes. Item embeddings are then derived by pooling the embeddings of users who interacted with that item.

  - Use Case: Useful when user attributes are rich and you want to find items liked by users with similar attributes.

  - Configuration Example (Scoring):

- user-item-content-similarity:

  - How it works: This policy performs a direct comparison between user attributes and item attributes. It computes user embeddings from user features and item embeddings from item features independently. Similarity is then calculated directly between these embeddings.

  - Use Case: Powerful when user and item attributes exist in an aligned context (e.g., user 'interests' attribute vs. item 'tags' attribute). It doesn't rely on past interaction history for the similarity calculation itself, only on the inherent attributes.

  - Configuration Example (Scoring):

These policies allow you to leverage content in sophisticated ways, often using powerful pre-trained or fine-tuned embedding models managed by the platform, without needing to build the feature extraction and similarity computation pipelines yourself.
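The difference between the three flavors comes down to where each side's vector comes from. The sketch below is a conceptual illustration in plain Python, not Shaped's API or implementation: the function names, the tiny 2-d attribute embeddings, and mean pooling as the pooling operator are all assumptions.

```python
import math

# Hypothetical attribute embeddings, assumed to come from some encoder and to share
# one 2-d space (think dimension 0 ~ "sci-fi", dimension 1 ~ "romance").
ITEM_ATTR = {"i1": [1.0, 0.0], "i2": [0.8, 0.2], "i3": [0.0, 1.0]}
USER_ATTR = {"u1": [0.9, 0.1], "u2": [0.1, 0.9]}
INTERACTIONS = {"u1": ["i1"], "u2": ["i3"]}  # i2 has no interactions yet


def mean_pool(vectors):
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def item_content_score(user, item):
    """item-content-similarity style: user = pool of interacted items' attribute embeddings."""
    user_vec = mean_pool([ITEM_ATTR[i] for i in INTERACTIONS[user]])
    return cosine(user_vec, ITEM_ATTR[item])


def user_content_score(user, item):
    """user-content-similarity style: item = pool of interacting users' attribute embeddings."""
    users = [u for u, items in INTERACTIONS.items() if item in items]
    if not users:
        return None  # nobody has interacted with this item, so it has no embedding here
    return cosine(USER_ATTR[user], mean_pool([USER_ATTR[u] for u in users]))


def user_item_content_score(user, item):
    """user-item-content-similarity style: compare attribute embeddings directly."""
    return cosine(USER_ATTR[user], ITEM_ATTR[item])


for score in (item_content_score, user_content_score, user_item_content_score):
    print(score.__name__, {item: score("u1", item) for item in ITEM_ATTR})
```

In this sketch, item i2 has no interactions, so the user-content style cannot build a vector for it, while the direct user-item comparison still scores it from attributes alone.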

Advantages and Disadvantages of Content-Based Filtering

Advantages:

- ✅ Handles Item Cold Start: Can recommend new items immediately if they have content features.

- ✅ User Independence: Recommendations for one user don't depend on other users' data.

- ✅ Interpretability: Recommendations can often be explained based on item features (e.g., "Recommended because you liked items with genre X").

- ✅ No Popularity Bias: Doesn't inherently favor popular items; recommendations are based purely on feature similarity.
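The interpretability advantage follows from the similarity formula: cosine similarity is the dot product p_u ⋅ v_j divided by two positive norms, so each feature's product p_u[k] * v_j[k] is its share of the score. A minimal sketch of turning that into an explanation, with a hypothetical feature list and vectors:

```python
# Hypothetical named features shared by the profile and item vectors.
FEATURES = ["genre:sci-fi", "genre:romance", "theme:space", "theme:robots"]


def explain(profile, item_vec, feature_names, top=2):
    """Return the features that contribute most to the dot product p_u . v_j."""
    contributions = [
        (p * v, name) for p, v, name in zip(profile, item_vec, feature_names) if p * v > 0
    ]
    return [name for _, name in sorted(contributions, reverse=True)[:top]]


# Hypothetical profile p_u and candidate item v_j over FEATURES.
profile = [0.9, 0.1, 0.7, 0.2]
item = [1.0, 0.0, 0.0, 1.0]
reasons = explain(profile, item, FEATURES)
print("Recommended because you liked items with " + " and ".join(reasons))
```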

Disadvantages:

- ❌ Feature Engineering/Representation: Quality heavily depends on available content features and the methods used to represent them.

- ❌ Overspecialization: Can lead to narrow recommendations and limited discovery ("filter bubble").

- ❌ User Cold Start: Still requires some initial user interactions to build a meaningful profile for policies like item-content-similarity.

- ❌ Doesn't Leverage Collaborative Information: Misses out on predicting preference based on what similar users like, which can often capture subtle preferences not obvious from content alone.

Conclusion: A Valuable Tool in the Recommendation Toolkit

Content-Based Filtering is a foundational recommendation technique that leverages the characteristics of items to predict user preference. From its origins in simple keyword matching to modern implementations using sophisticated deep learning embeddings for text and images, it offers a powerful way to personalize experiences based on individual taste profiles derived from content.

While it faces challenges like overspecialization and requires good quality content data, its ability to handle new items and provide interpretable recommendations makes it invaluable. Platforms like Shaped simplify its deployment, offering various content-similarity strategies tailored to different use cases. Often, the most powerful recommendation systems employ hybrid approaches, combining the strengths of Content-Based Filtering with Collaborative Filtering and other techniques to deliver the most relevant and engaging user experiences.

Ready to build smarter recommendations using the content your users love?

Request a demo of Shaped today to see how our content-based policies work with your specific use case. Or, start exploring immediately with our free trial sandbox.