When does this matter? Not every application needs to solve this problem. If you're building a simple chat interface over a few PDFs, basic retrieval-augmented generation (RAG) is probably sufficient. But if you're building autonomous agents that make consequential decisions—procurement, legal research, financial analysis—positional bias becomes a reliability bottleneck.

The Hidden Costs of Noisy Context

Consider a corporate procurement agent. A user asks: "Find the most reliable supplier for ergonomic office chairs with at least 50 units in stock under $300/unit."

A standard vector database returns 50 suppliers that mention "ergonomic chairs." You've now introduced three costs:

- Latency: The LLM must process 50 supplier profiles before responding

- Token costs: You're paying for a massive context window, most of which will be ignored

- Accuracy: If the only supplier meeting all criteria is at position #30, the LLM is likely to default to #1 (over budget) or #50 (out of stock) due to positional bias

This isn't a theoretical problem. In production systems, we've seen agents consistently fail on mid-list items even when they're objectively the best match.

The Maturity Gap: From Chat to Agents

Most developers start with naive RAG: retrieve the top-k semantically similar chunks and pass them to the LLM. This works fine for conversational applications where "close enough" is acceptable.

But autonomous agents need more than semantic similarity. They need documents that are:

- Legally compliant

- Actually in stock (or currently valid)

- Historically preferred by the business

- Ranked by multiple criteria simultaneously

Traditional RAG assumes the LLM will filter and rank inside the context window.

Agentic retrieval pushes that intelligence into the database layer, so the LLM only sees pre-validated, pre-ranked results.

Common Workarounds (and Their Tradeoffs)

When simple RAG fails, most teams try one of these approaches:

1. Multi-Stage Pipeline (The Latency Tax)

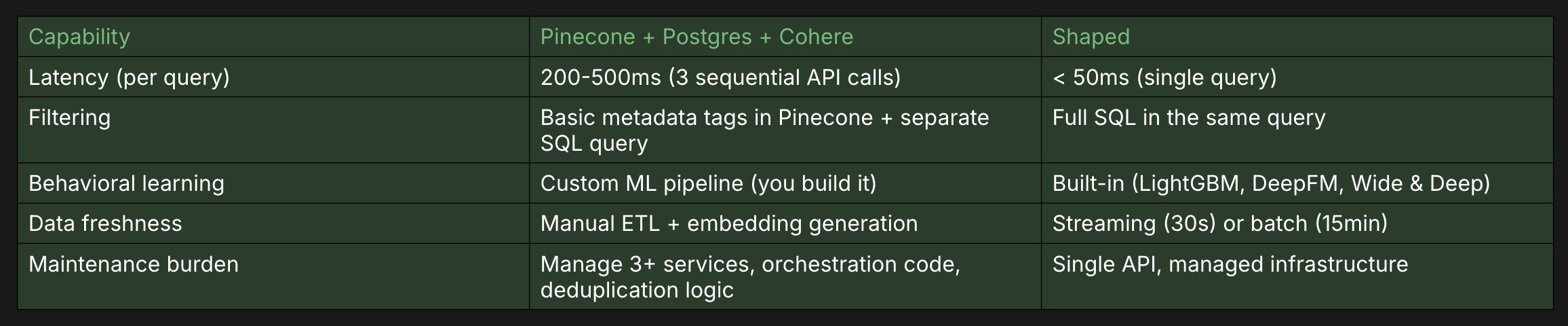

The typical pattern: use Pinecone for vector search, Postgres for metadata, and Cohere for reranking. This

can work, but introduces sequential network hops that compound with every agent decision. If your agent performs 5 reasoning steps per task, you're orchestrating 15+ API calls across 3+ services. Each hop adds 50-200ms of latency. More critically,

you're responsible for the glue code: maintaining connection pooling, handling rate limits, debugging failures across vendor boundaries, and version-managing three separate SDKs.

2. Long Context Brute Force (The Token Tax)

Some teams use long-context models (200k+ tokens) and dump everything in. The problem: you're paying a premium for tokens the model statistically ignores. At scale, this approach can cost 10x more per query than properly pruned retrieval. And you

still hit positional bias—the model defaults to the first or last item regardless of context window size.

3. Custom Search Infrastructure (The Maintenance Tax)

ElasticSearch or OpenSearch give you complete control. You can build hybrid search, tune scoring functions, and optimize for your exact use case. But this requires:

- Dedicated infrastructure engineers to manage clusters

- Ongoing tuning of BM25 weights, vector dimensions, and index settings

- Custom reranking logic (often a separate ML pipeline)

- No native learning from user behavior—you're manually adjusting ranking formulas

This approach works for large engineering teams, but most teams building agents don't have the bandwidth to become search infrastructure experts. You wanted to build an agent, not operate a search cluster.

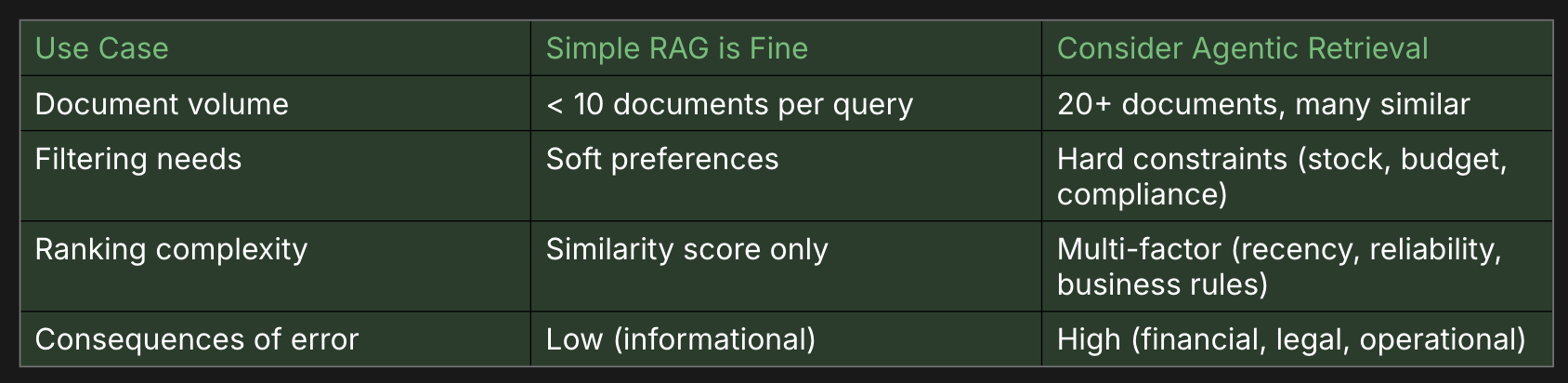

When Do You Need Specialized Retrieval?

Not every project needs to solve this problem. Here's a quick decision framework:

Why Shaped Solves This Better

Shaped was built specifically to solve the agentic retrieval problem as a

unified system. Unlike cobbling together vector DBs, SQL databases, and reranker APIs, Shaped provides filtering, ranking, and pruning in a single query with sub-50ms latency.

Here's what makes it different:

1. Native Multi-Factor Ranking (Not Just Similarity)

Traditional vector DBs return documents sorted by cosine similarity. Shaped lets you combine semantic embeddings with behavioral signals (click-through rates, historical preferences, business rules) in a single scoring function. The

ranking happens at query time, not in a separate reranking step, which eliminates an entire network hop.

2. SQL-Grade Filtering (Built-In, Not Bolted-On)

Most vector DBs offer basic metadata filtering ("tags: electronics"). Shaped gives you full SQL expressiveness in the same query as vector search. You can filter on ranges, nested conditions, or derived fields without maintaining a separate relational database.

In a Pinecone + Postgres setup, this requires two separate queries, a join operation, and custom deduplication logic.

3. Learning From Agent Behavior

Shaped's ranking models (like LightGBM, DeepFM, and Wide & Deep) learn from implicit feedback: which suppliers users actually select, which results get clicked, which documents lead to successful outcomes. This happens automatically—you don't need to build a separate ML pipeline or manually tune scoring weights.

ElasticSearch requires you to implement this learning layer yourself. Pinecone doesn't offer it at all.

4. Real-Time Sync (Streaming or Batch)

Shaped connects directly to your operational databases and supports two modes:

Real-time streaming connectors (Segment, Kafka, Kinesis) push data to Shaped within 30 seconds. When stock levels change or new suppliers are added, your agent sees updates almost immediately.

Batch connectors (BigQuery, Snowflake, Postgres) sync every 15 minutes. Even in batch mode, you're not building ETL pipelines or managing embedding generation infrastructure—Shaped handles incremental replication automatically.

Side-by-Side: Shaped vs. DIY Stack

Implementation Pattern

Here's how you might move from noisy RAG to structured agentic retrieval:

Step 1: Connect Your Data

Shaped provides 20+ native connectors for common data sources. Use streaming connectors (Segment, Kafka, Kinesis) for 30-second updates, or batch connectors (BigQuery, Snowflake, Postgres) for 15-minute syncs. Configure via the console or CLI.

Step 2: Define Ranking Logic

An "Engine" defines how Shaped should rank context. Unlike pure vector similarity, you can combine semantic embeddings with behavioral models that learn from user preferences.

Step 3: Query with Business Rules

ShapedQL lets you combine semantic search with hard filters and ML-driven ranking:

Step 4: Integrate with Your Agent

Connect the ranked results back to your LLM agent. Because results are pre-validated, the agent's reasoning time is significantly reduced:

What You Gain with Shaped

Teams using Shaped for agent retrieval report:

- 4-10x latency reduction: Eliminating multi-hop API calls means agents respond in under 100ms instead of 500ms+

- Zero hallucinations on business rules: SQL-grade filtering makes it structurally impossible to recommend out-of-stock items or violate budget constraints

- 60-90% reduction in token costs: Pruning context from 50 documents to 5 translates directly to lower OpenAI/Anthropic bills

- Weeks of engineering time saved: No glue code to maintain, no search clusters to tune, no reranking pipelines to debug

The Bottom Line

If you're building agents that make real decisions—procurement, legal research, customer support—positional bias is not a theoretical problem. It's a reliability bottleneck that shows up in production as hallucinations, ignored constraints, and user frustration.

You have three options:

- Build it yourself with ElasticSearch/Pinecone (works, but takes months and ongoing maintenance)

- Use a multi-vendor stack (works, but slow and brittle)

- Use Shaped and get filtering + ranking + learning in a single query with sub-50ms latency

Most teams building agents don't want to become search infrastructure experts. They want to ship reliable, fast agents. That's what Shaped was built for.

Ready to see the difference?

Start building with Shaped today. Get $300 in free credits and see how pre-ranked retrieval transforms your agent's accuracy and speed. Visit console.shaped.ai to get started.