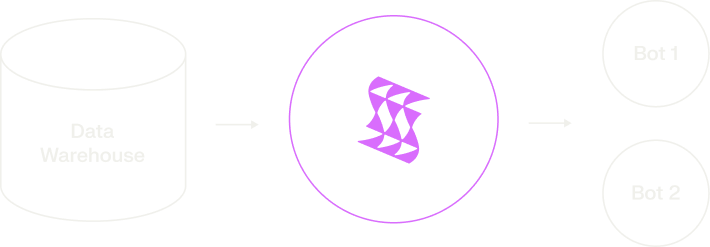

Context-aware retrieval for AI Agents

Stop stuffing context windows. Power your agents with a stateful relevance engine that retrieves chunks based on user history and intent, not just semantic similarity.

Step 1: Connect your knowledge base

Ingest your documents, vector embeddings, and (crucially) user interaction streams into a single unified schema. No complex ETL required.

Step 2: Configure retrieval logic

Stop writing Python glue code to filter chunks. Define complex retrieval strategies that combine vector similarity, keyword matching, and user history in a single declarative query.
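As a rough illustration of the idea, the sketch below describes a retrieval strategy as data (one weight per signal) instead of hand-written filtering code. It is plain Python, not Shaped's query language: `RetrievalStrategy`, `Candidate`, and `rank` are hypothetical names, and the three signals are assumed to be precomputed scores between 0 and 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrievalStrategy:
    # Hypothetical weights per signal; not Shaped's actual configuration.
    vector_weight: float = 0.5
    keyword_weight: float = 0.3
    history_weight: float = 0.2
    limit: int = 3


@dataclass(frozen=True)
class Candidate:
    chunk_id: str
    vector_score: float   # semantic similarity to the query
    keyword_score: float  # keyword match strength
    history_score: float  # affinity with the user's past interactions


def rank(candidates, strategy):
    """Order candidates by the weighted sum of their signals, best first."""
    def combined(c):
        return (strategy.vector_weight * c.vector_score
                + strategy.keyword_weight * c.keyword_score
                + strategy.history_weight * c.history_score)

    ordered = sorted(candidates, key=combined, reverse=True)
    return [c.chunk_id for c in ordered[:strategy.limit]]


candidates = [
    Candidate("refund-policy", 0.9, 0.2, 0.1),
    Candidate("shipping-faq", 0.6, 0.8, 0.9),
    Candidate("press-release", 0.3, 0.1, 0.0),
]
top = rank(candidates, RetrievalStrategy())
```

Changing the strategy object, rather than the ranking code, is what shifts the balance between similarity, keywords, and history.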

Step 3: Deploy automatically

Shaped handles the infrastructure: training, scheduling, and auto-scaling your retrieval endpoint. Ship production-ready agent memory without managing vector indices or inference servers.

Whether you are building a customer support bot, a shopping assistant, or an internal research tool, every agent shares the same unified understanding of the user.

Solutions for every platform

Semantic search

Retrieve knowledge based on meaning using modern embedding models.
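For intuition, meaning-based retrieval usually comes down to comparing embedding vectors. The sketch below ranks chunks by cosine similarity to a query vector. The three-dimensional vectors are toy values made up for illustration (real embedding models produce hundreds of dimensions), and `semantic_search` is a hypothetical helper, not a Shaped function.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, chunk_vecs, k=2):
    """Return the ids of the k chunks most similar to the query vector."""
    scored = sorted(
        chunk_vecs.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [chunk_id for chunk_id, _ in scored[:k]]


# Toy embeddings, invented for this example.
chunk_vecs = {
    "reset-password": [0.9, 0.1, 0.0],
    "billing-cycle": [0.1, 0.9, 0.2],
    "delete-account": [0.7, 0.0, 0.6],
}
results = semantic_search([1.0, 0.0, 0.1], chunk_vecs)
```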

User history

Personalize responses based on past clicks, views, and chat sessions.

Hybrid retrieval

Combine keyword matching (BM25) with vector search for precise recall.
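One common way to merge a keyword ranking with a vector ranking is reciprocal rank fusion (RRF). The page does not say which fusion method Shaped uses, so treat this as a generic sketch: the two input rankings are assumed to come from a BM25 index and a vector index, the chunk ids are invented, and `k=60` is the conventional smoothing constant.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of ids into one list, best first.

    Each id earns 1 / (k + position) from every list it appears in,
    so items ranked well by more than one retriever rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for position, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + position)
    # Sort by descending score; break ties alphabetically for stable output.
    return sorted(scores, key=lambda chunk_id: (-scores[chunk_id], chunk_id))


# Hypothetical results from a keyword (BM25) index and a vector index.
keyword_ranking = ["sku-123-manual", "returns-policy", "warranty"]
vector_ranking = ["returns-policy", "refund-howto", "sku-123-manual"]
fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
```

Because RRF works on ranks rather than raw scores, it avoids having to normalize BM25 scores against cosine similarities.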

Metadata filtering

Apply hard constraints (availability, permissions) within the retrieval query.
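The point of hard constraints is that they run before ranking, so a highly relevant chunk the user may not see never reaches the agent. A minimal sketch, assuming each chunk carries an `available` flag and an `allowed_groups` set (both field names are hypothetical, as is `filter_then_rank`):

```python
def filter_then_rank(chunks, user_groups, k=2):
    """Drop chunks that fail hard constraints, then rank the rest by score."""
    allowed = [
        c for c in chunks
        # Keep only available chunks shared with at least one of the user's groups.
        if c["available"] and c["allowed_groups"] & user_groups
    ]
    allowed.sort(key=lambda c: c["score"], reverse=True)
    return [c["id"] for c in allowed[:k]]


# Invented example data: relevance scores plus access metadata.
chunks = [
    {"id": "hr-salaries", "score": 0.95, "available": True, "allowed_groups": {"hr"}},
    {"id": "onboarding", "score": 0.80, "available": True, "allowed_groups": {"hr", "staff"}},
    {"id": "old-handbook", "score": 0.90, "available": False, "allowed_groups": {"staff"}},
    {"id": "it-setup", "score": 0.60, "available": True, "allowed_groups": {"staff"}},
]
visible = filter_then_rank(chunks, user_groups={"staff"})
```

Here the two highest-scoring chunks are excluded, one for permissions and one for availability, regardless of how relevant they are.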

Ready to fix your RAG pipeline?

Join engineering teams using Shaped to drive engagement and revenue.