The Many Flavors of Experiments

When companies talk about “experimentation,” they often mean very different things. In recommendation systems, experiments typically fall into these categories:

- New Use Cases – Expanding beyond your homepage to, say, category pages or similar-item carousels.

- New Objectives – Shifting optimization from pure conversion to repeat purchases, average order value, or engagement.

- New Data Types or Sources – Moving from product data to content data, or from Amplitude events to Segment events.

- New Features – Creating derived data points (e.g., an is_weekend flag derived from a timestamp) to feed into models.

- New Models or Model Categories – Tweaking an existing model’s parameters vs. adopting an entirely new architecture.
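To make the "new features" category concrete, here is a minimal sketch of deriving the is_weekend flag (plus an hour-of-day feature) from a raw event timestamp. The event schema, with a "timestamp" field holding an ISO-8601 string, and the helper names are hypothetical illustrations, not the format of any particular tracking tool or of Shaped's feature pipeline.

```python
from datetime import datetime


def is_weekend(ts: datetime) -> bool:
    """Return True if the timestamp falls on a Saturday or Sunday.

    datetime.weekday() returns 0 for Monday through 6 for Sunday,
    so 5 and 6 are the weekend days.
    """
    return ts.weekday() >= 5


def add_time_features(event: dict) -> dict:
    """Derive simple calendar features from an event's raw timestamp.

    Assumes a hypothetical schema where "timestamp" is an ISO-8601 string.
    The original event fields are kept and the new features are appended.
    """
    ts = datetime.fromisoformat(event["timestamp"])
    return {
        **event,
        "is_weekend": is_weekend(ts),
        "hour_of_day": ts.hour,
    }


if __name__ == "__main__":
    # 2024-06-08 is a Saturday.
    event = {"user_id": "u1", "item_id": "i42", "timestamp": "2024-06-08T14:30:00"}
    print(add_time_features(event))
```

Cheap derived features like this are often the first experiments teams try, because they reuse data already being collected.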

Each of these comes with a different scope and cost in time, infrastructure, and cross-team collaboration.

Two Worlds: Offline vs. Online

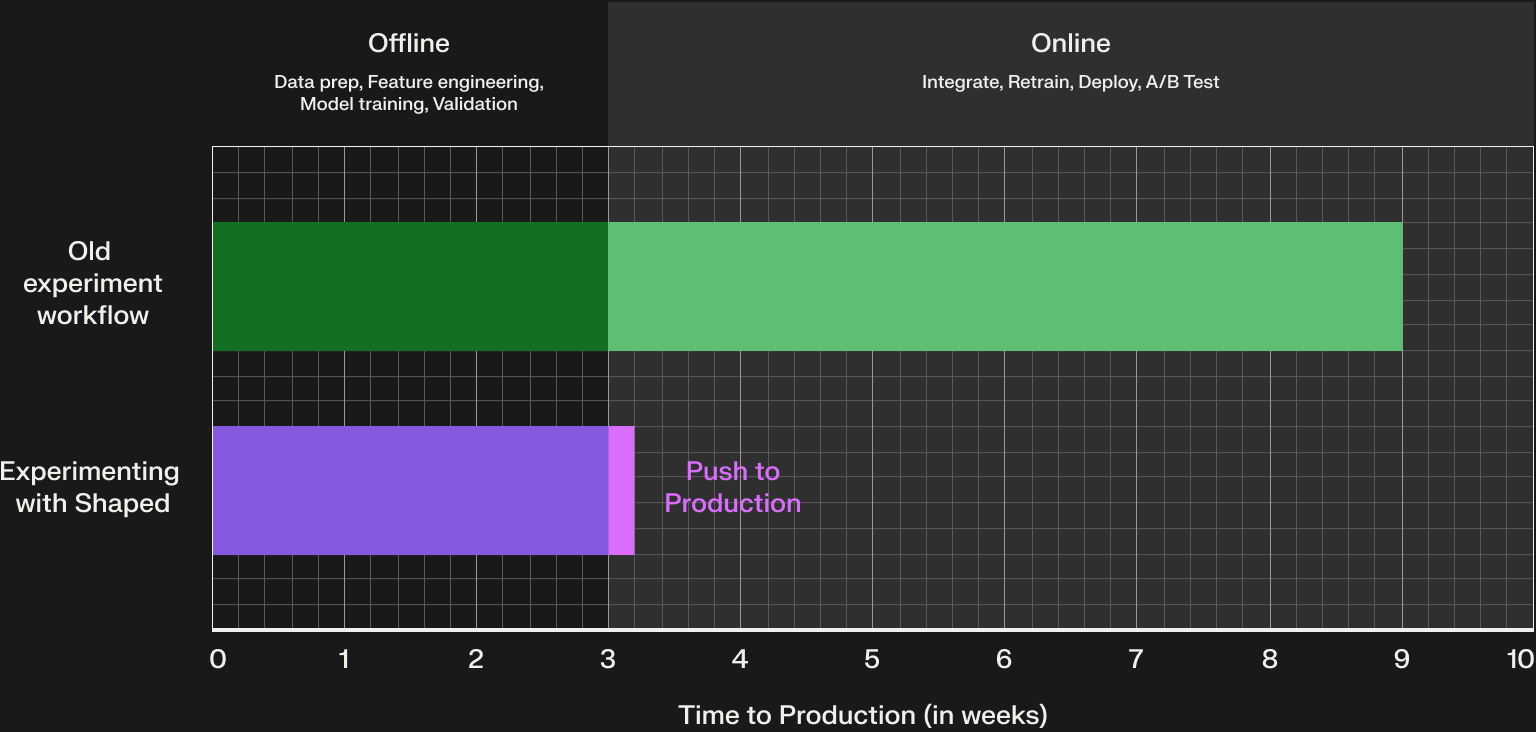

Most experimentation workflows have two major phases:

1. Offline experimentation: This is your proving ground, where you run models on historical data to see whether they outperform the current production system. You split your dataset into training and validation sets, evaluate performance, and compare multiple candidate ideas. This phase might take 2–3 weeks, and it’s often exploratory and enjoyable for data scientists.

2. Online experimentation: Here’s where the fun stops for many teams. Moving an offline win into production can require:

- Feature store integration

- Model retraining and redeployment

- Infrastructure changes for new model categories

- Setting up and running an A/B test

This can take anywhere from a few weeks to over a year, depending on the complexity and maturity of your stack.

And this is where most of the slowdown happens.
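For contrast with the online phase, here is a minimal sketch of what a typical offline comparison looks like: split a historical interaction log by time, fit a baseline and a candidate, and score both on held-out data with recall@k. The toy log, the two models (a global-popularity baseline standing in for "production" and a hypothetical repeat-purchase candidate), and the choice of recall@k are illustrative assumptions, not Shaped's API or any specific team's setup.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Set, Tuple

# (user_id, item_id, timestamp): a toy, hypothetical interaction log format.
Interaction = Tuple[str, str, int]
Recommender = Callable[[str, int], List[str]]


def temporal_split(events: List[Interaction], cutoff: int):
    """Train on events before the cutoff, validate on events at or after it.

    Splitting by time rather than at random avoids training on the future.
    """
    train = [e for e in events if e[2] < cutoff]
    valid = [e for e in events if e[2] >= cutoff]
    return train, valid


def popularity_model(train: List[Interaction]) -> Recommender:
    """Baseline: recommend the globally most-interacted items to everyone."""
    ranked = [item for item, _ in Counter(i for _, i, _ in train).most_common()]
    return lambda user, k: ranked[:k]


def repeat_model(train: List[Interaction]) -> Recommender:
    """Candidate: a user's own most frequent items first, then popular items."""
    per_user: Dict[str, Counter] = defaultdict(Counter)
    for user, item, _ in train:
        per_user[user][item] += 1
    popular = popularity_model(train)

    def recommend(user: str, k: int) -> List[str]:
        recs = [item for item, _ in per_user[user].most_common(k)]
        # Top-k popular items always contain enough unseen items to fill k slots.
        for item in popular(user, k):
            if len(recs) >= k:
                break
            if item not in recs:
                recs.append(item)
        return recs[:k]

    return recommend


def recall_at_k(model: Recommender, valid: List[Interaction], k: int) -> float:
    """Per-user share of held-out items found in the top-k, averaged over users."""
    held_out: Dict[str, Set[str]] = defaultdict(set)
    for user, item, _ in valid:
        held_out[user].add(item)
    scores = [len(set(model(u, k)) & items) / len(items) for u, items in held_out.items()]
    return sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    events = [
        ("alice", "coffee", 1), ("alice", "coffee", 2), ("bob", "tea", 1),
        ("bob", "mug", 2), ("carol", "mug", 3), ("carol", "mug", 4),
        ("alice", "coffee", 10), ("bob", "tea", 11), ("carol", "mug", 12),
    ]
    train, valid = temporal_split(events, cutoff=10)
    print("baseline recall@1:", recall_at_k(popularity_model(train), valid, 1))
    print("candidate recall@1:", recall_at_k(repeat_model(train), valid, 1))
```

Everything here runs on a laptop against a static log, which is exactly why this phase feels fast; none of it touches feature stores, serving infrastructure, or live traffic.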

The Research-to-Production Gap

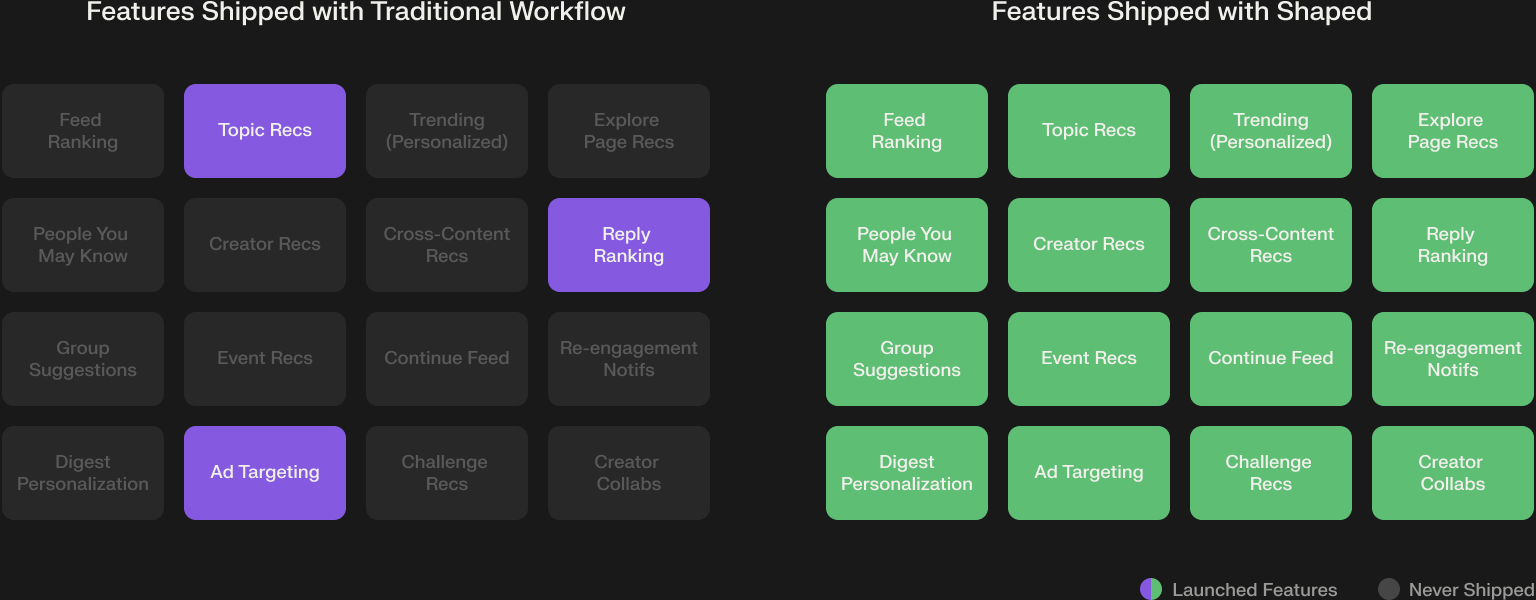

Frequently cited industry estimates put the share of ML projects that never make it to production as high as 80%. The main culprit is the research-to-production gap: the messy, political, and resource-intensive work of translating an offline model into a live experiment.

This gap often means teams promote only their top three experiments out of dozens of promising ones. Valuable ideas get left behind, not because they didn’t work, but because pushing them live was too costly.

How Shaped Changes the Game

Shaped was built to close this gap for recommendation and search systems. Our approach:

- Keep the offline phase familiar – Data scientists can still run their experiments in a comfortable, flexible environment.

- Make the online phase instant – Once an experiment is ready, you can deploy it to production with the push of a button. No separate handoff to another team. No months-long infrastructure project.

- Integrate the full stack – From data ingestion to feature engineering to online serving, we provide infrastructure purpose-built for recommendation use cases.

- Support internal politics – Because deployment is so fast, you can test multiple stakeholders’ ideas back-to-back (or in parallel) and let the data decide.
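"Letting the data decide" usually comes down to reading out an A/B test. Below is a minimal sketch of one standard readout, a pooled two-proportion z-test comparing control and treatment conversion rates. The function name and example counts are hypothetical, and the test relies on a normal approximation that assumes reasonably large samples; production experimentation setups often add safeguards such as pre-planned sample sizes and corrections when many variants are compared at once.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of control (A) and treatment (B).

    Returns (absolute_lift, z, two_sided_p) using the pooled-variance
    z-test, a normal approximation suited to reasonably large samples.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the z-test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


if __name__ == "__main__":
    lift, z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=560, n_b=10_000)
    print(f"lift={lift:.4f} z={z:.2f} p={p:.3f}")
```

With 500 of 10,000 users converting in control and 560 of 10,000 in treatment, the lift is 0.6 percentage points with z ≈ 1.89, just short of significance at the conventional 5% level. That is a reminder that fast deployment helps only if each test also gets enough traffic to reach a clear answer.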

The result? A dramatic increase in experimentation velocity: how quickly your team gets from an initial idea to measured impact.

Why It Matters

For product managers, this means strategic objectives like “increase repeat purchase rate” can translate into live experiments in weeks, not quarters. For ML engineers, it means you don’t have to watch your best ideas languish in a backlog. For leadership, it means a faster path to measurable business impact from your recommendation investments.

If you’re tired of losing good experiments to the research-to-production gap, Shaped can help you close it and unlock the full potential of your team’s ideas. Book a demo with our experts to learn more.
