Imagine an automated support agent that doesn’t just read the current ticket, but instantly recalls that this specific user experienced a similar API error three months ago, prefers Python code snippets over Node.js, and holds a "Tier 1" enterprise SLA. This isn't science fiction anymore; it’s an increasingly common architecture among forward-thinking engineering teams. By decoupling memory from the immediate context window, developers are building agents that feel less like calculators and more like colleagues.

This shift represents a massive opportunity. Instead of forcing users to repeat themselves, agents equipped with robust memory infrastructure can anticipate needs, personalize interactions continuously, and navigate complex, multi-turn goals without losing the thread. We are moving from stateless interactions to stateful, enduring intelligence.

What Is AI Agent Memory?

At its core, AI Agent Memory is the infrastructure that allows an autonomous system to store, index, and retrieve information over time, independent of the LLM's limited context window.

While "Context" is what the model sees right now, "Memory" is everything the model could know. It is the bridge between vast data lakes and the finite prompt.

Key trends defining this category in 2026 include:

- From Storage to Ranking: It's no longer enough to just store embeddings. The best tools now rank memories based on relevance, recency, and user intent, not just vector similarity.

- Real-Time "Learning": Agents need to remember actions taken seconds ago, not just yesterday. Streaming data pipelines are replacing batch ETL jobs for memory updates.

- Hybrid Recall: Pure vector search is too fuzzy for specific tasks. Leading tools combine semantic search (vectors) with lexical search (keywords) and structured metadata filtering (SQL) to ensure precision.
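
To make the "ranking, not just storage" idea concrete, here is a minimal sketch that blends vector similarity with a recency decay and a strict per-user metadata filter. The `Memory` class, the 30-day half-life, and the 0.3 recency weight are illustrative assumptions, not values taken from any tool in this list.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    embedding: list[float]
    age_days: float
    user_id: str


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_memories(query_vec, memories, user_id, half_life_days=30.0, recency_weight=0.3):
    """Filter by user, then score each memory by similarity blended with recency."""
    scored = []
    for m in memories:
        if m.user_id != user_id:  # structured metadata filter
            continue
        similarity = cosine(query_vec, m.embedding)
        recency = 0.5 ** (m.age_days / half_life_days)  # halves every half_life_days
        score = (1 - recency_weight) * similarity + recency_weight * recency
        scored.append((score, m))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in scored]


memories = [
    Memory("old preference", [1.0, 0.0], age_days=365, user_id="u1"),
    Memory("recent preference", [0.9, 0.1], age_days=1, user_id="u1"),
    Memory("someone else's note", [1.0, 0.0], age_days=0, user_id="u2"),
]
ranked = rank_memories([1.0, 0.0], memories, user_id="u1")
```

Here the year-old memory is the closest vector match, but the recent one ranks first once recency is taken into account, and the other user's note never enters the candidate set.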

Who Needs Dedicated Agent Memory (and When)?

You might start by stuffing a few documents into a system prompt. But as your agent scales, you will hit an inflection point where a dedicated memory engine becomes a competitive advantage.

- The "Goldfish" Problem: If your users constantly have to remind the agent of previous instructions or preferences, you are ready for a memory layer.

- The Hallucination Trap: If your agent invents facts because it can't find the specific policy document buried in your knowledge base, you need better retrieval logic.

- The Personalization Opportunity: If you want your agent to treat a CEO differently than a junior developer based on historical interactions, you need a memory system that supports user-centric retrieval.

How We Chose the Best Agent Memory Tools

The market is noisy, filled with vector databases, orchestrators, and retrieval engines. We evaluated dozens of tools based on these five criteria:

- Retrieval Logic: Can the tool rank results based on business logic (e.g., popularity, recency), or does it only do basic vector math?

- Latency & Real-Time Updates: How quickly does a new "memory" become searchable: within seconds, or only after hours?

- Hybrid Capabilities: Does it support both keyword search (BM25) and vector search to handle specific identifiers like SKUs or error codes?

- Developer Experience: How easy is it to integrate into an existing agent loop (LangChain, AutoGPT, custom)?

- Filtering & Control: Can you strictly enforce data privacy rules (e.g., "only show memories belonging to User X")?

The 8 Best Tools for AI Agent Memory in 2026

1. Shaped

Quick Overview Shaped is an AI-native Retrieval Engine that fundamentally changes how agents access information. While most tools on this list are databases designed to store vectors, Shaped is an intelligence layer designed to rank them. It manages the entire memory lifecycle—connecting to live data streams, generating embeddings, and using advanced scoring models to decide exactly which memory is most relevant to the current user context.

Best For Engineering teams building production-grade agents (Customer Support, Sales, Personal Assistants) who need personalized, highly accurate retrieval without building complex custom pipelines.

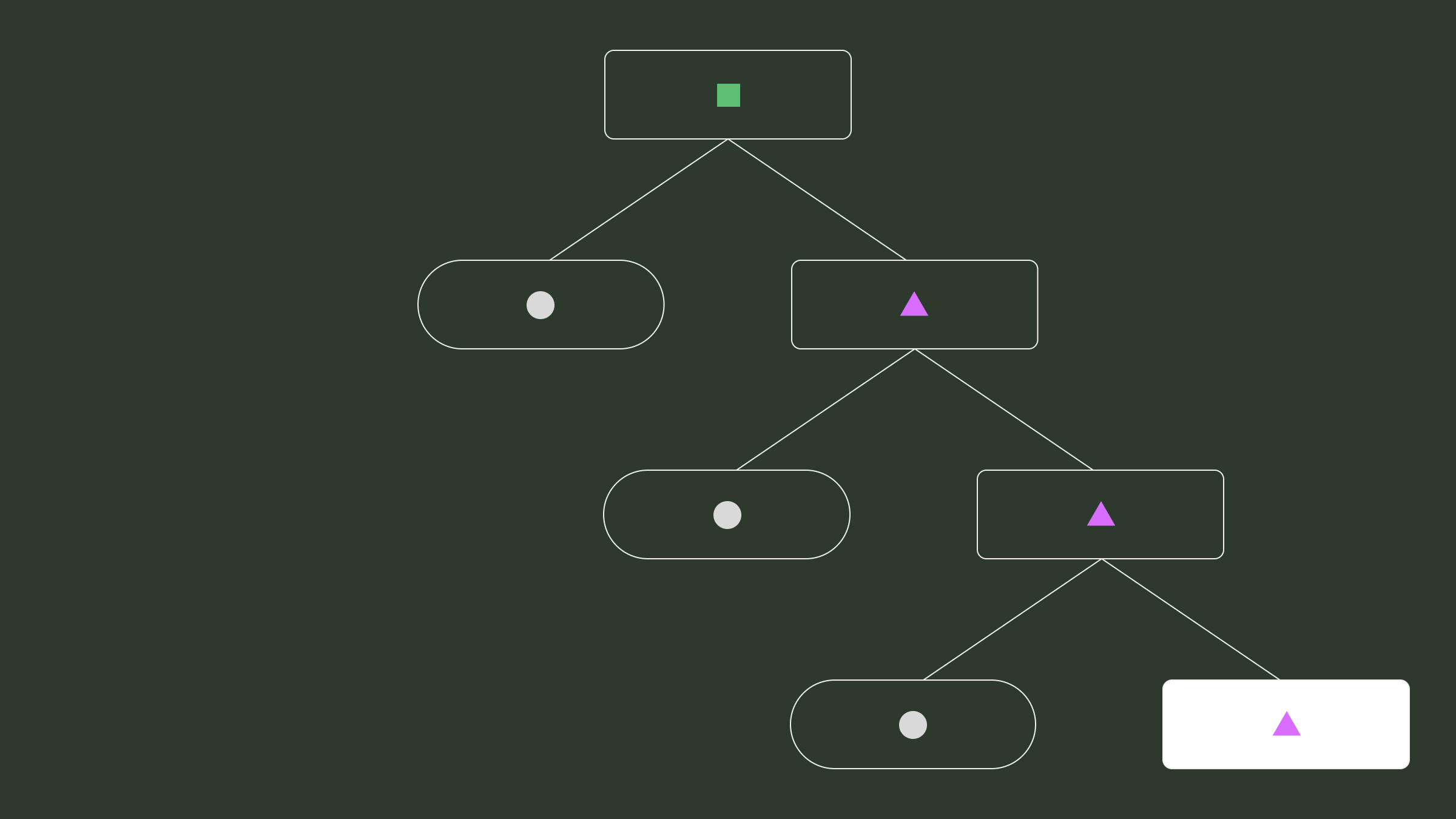

What Makes It Special

Shaped differentiates itself by treating memory retrieval as a 4-Stage Pipeline: Retrieve, Filter, Score, and Reorder.

- Advanced "Scoring" Stage: This is the main differentiator. Most vector DBs rank results solely by a distance metric such as cosine similarity. Shaped allows you to apply Value Models using ShapedQL (a SQL-like language). You can write queries that say, "Find documents semantically similar to the prompt, but boost documents that are recent and penalize documents that have low user ratings." This helps the agent retrieve high-quality context, not just "similar" noise.

- Real-Time "Time Travel": Shaped connects directly to event streams like Segment, Kafka, and Kinesis. If a user clicks a button or sends a message, Shaped indexes that event in seconds. This gives agents immediate short-term memory of the session and long-term memory of the user's history simultaneously.

- User-Centric Retrieval: Shaped natively understands the concept of a "User." You can pass a user_id into the query, and the engine will use interaction pooling to bias retrieval results based on that specific user's past behavior. This creates an agent that feels like it "knows" the user instantly.

- Hybrid Search is Native: You don't have to choose between keyword search and vector search. Shaped runs both in parallel (text_search(mode='lexical') + text_search(mode='vector')) and fuses the results, ensuring you catch specific error codes (lexical) and broad concepts (semantic).

- ShapedQL Interface: Instead of learning a proprietary JSON syntax, developers use a SQL-like interface to query memory. This lowers the barrier to entry and makes complex logic readable.
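
The article does not say how Shaped fuses lexical and vector results, so the sketch below uses Reciprocal Rank Fusion (RRF), one widely used technique, purely as an illustration of what "fusing" two ranked lists can mean. The document IDs and the constant `k=60` are assumptions chosen for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so items ranked well by both retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score (descending), breaking ties by ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical outputs of a keyword retriever and a vector retriever.
lexical = ["err-502-runbook", "api-errors-faq", "billing-faq"]
semantic = ["api-errors-faq", "gateway-timeouts", "err-502-runbook"]

fused = reciprocal_rank_fusion([lexical, semantic])
```

Documents that appear in both lists end up ahead of documents that only one retriever found, which is the behavior you want when a query mixes an exact error code with a broader concept.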

Where It Falls Short

- Not a Generic Blob Store: Shaped is optimized for structured retrieval and ranking. If you just want to dump a billion unstructured text blobs without any user context or ranking logic, a raw vector DB might be cheaper (though less effective).

Pricing Free tier available. Usage-based monthly pricing. Contact Shaped for enterprise scale.

"We needed a solution that delivered the best user experience. After evaluating the RecSys landscape, Shaped was the clear choice." - Han Yuan, CTO, Outdoorsy

2. Pinecone

Quick Overview Pinecone is one of the most widely adopted managed vector databases. It provides serverless, highly scalable infrastructure for storing and searching billions of embeddings with low latency.

Best For Teams that need massive scale and raw storage performance, and who have the engineering resources to build their own ranking/retrieval logic on top of it.

What Makes It Special

- Serverless Architecture: Pinecone pioneered the "serverless vector DB." You don't provision shards or manage instances; you just write vectors and pay for what you use.

- Performance at Scale: It is battle-tested. Even with 100 million memories, Pinecone is designed to return approximate nearest neighbors in milliseconds.

- Namespace Isolation: It allows you to partition data easily (e.g., one namespace per tenant), which is crucial for multi-tenant agent architectures.

- Broad Ecosystem: As a market leader, it integrates natively with almost every LLM framework (LangChain, LlamaIndex, OpenAI Assistants).

Where It Falls Short

- It is primarily a storage engine. It gives you the mathematical nearest neighbors. If you want to rank memories based on complex business logic (like "user intent" or "document popularity"), you often have to build a separate reranking microservice to process Pinecone's output.

Pricing Free tier available; Standard plan starts at roughly $70/month; Serverless pricing is usage-based.

3. Weaviate

Quick Overview Weaviate is an open-source, modular vector database that positions itself as an "AI-native" database. It is particularly famous for its robust Hybrid Search capabilities and modular architecture that can handle vectorization internally.

Best For Developers who want an open-source option with strong out-of-the-box support for combining keyword search with vector search.

What Makes It Special

- Built-in Vectorization: You don't always need an external embedding service. Weaviate modules (text2vec-openai, text2vec-cohere) can handle the embedding generation at the database level.

- Strong Hybrid Search: Weaviate has mature algorithms for fusing BM25 (keyword) and vector search scores. This is critical for agents that need to recall specific names or IDs that vector models often miss.

- GraphQL Interface: In addition to REST and gRPC APIs, Weaviate exposes a GraphQL query interface, which allows for flexible data fetching and traversal of cross-references between objects.

- Object-Centric: It stores data objects, not just vectors, allowing you to retrieve the actual content alongside the math.
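
To show why the hybrid weighting matters, here is a small sketch of alpha-weighted score fusion. It is loosely modeled on the idea of normalizing keyword and vector scores before blending them and is not Weaviate's implementation; the function names and sample scores are hypothetical. It follows the convention that `alpha=0` means pure keyword search and `alpha=1` means pure vector search.

```python
def min_max_normalize(scores):
    """Rescale a {doc_id: score} mapping to the range [0, 1]."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_scores(bm25, vector, alpha=0.5):
    """Blend normalized keyword and vector scores; alpha weights the vector side."""
    bm25_n, vec_n = min_max_normalize(bm25), min_max_normalize(vector)
    docs = set(bm25_n) | set(vec_n)
    return {
        d: (1 - alpha) * bm25_n.get(d, 0.0) + alpha * vec_n.get(d, 0.0)
        for d in docs
    }


def top_doc(bm25, vector, alpha):
    fused = hybrid_scores(bm25, vector, alpha)
    return max(fused, key=fused.get)


# Hypothetical raw scores for the query "SKU-123 setup".
bm25 = {"sku-123-manual": 12.0, "general-guide": 3.0}
vector = {"sku-123-manual": 0.60, "general-guide": 0.90}

keyword_leaning = top_doc(bm25, vector, alpha=0.2)
vector_leaning = top_doc(bm25, vector, alpha=0.8)
```

With a low alpha the exact SKU match wins; with a high alpha the semantically closer but less specific guide wins. That sensitivity is why the parameter usually needs tuning against real queries.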

Where It Falls Short

- Tuning the hybrid search parameters (alpha values) can be complex for teams new to search engineering.

- Hosting and managing the open-source version at scale requires significant DevOps overhead.

Pricing Open Source (Free); Weaviate Cloud Services (Serverless) starts at ~$25/month.

4. Mem0

Quick Overview Mem0 (formerly Embedchain) positions itself specifically as the "Memory Layer" for AI. Unlike generic vector DBs, Mem0 provides a specialized API designed to improve personalization by managing user, session, and agent-level memories intelligently. It uses LLMs to extract facts and preferences, storing them in a graph-like structure.

Best For Teams building personalized assistants (e.g., travel companions, tutors, coding assistants) where the primary goal is remembering specific facts about a user ("User likes aisle seats") rather than retrieving large documents.

What Makes It Special

- Multi-Level Memory Scopes: Mem0 natively distinguishes between User Memory (facts across all sessions), Session Memory (short-term context), and Agent Memory (agent knowledge).

- Graph Memory: It goes beyond vectors by detecting relationships between entities, allowing for more reasoning-heavy retrieval.

- Smart Ingestion: You don't just "upsert vectors." You call mem0.add("I bought a red car"), and it extracts the relevant facts, updates the user profile, and deletes conflicting old memories automatically.

- Developer Ergonomics: The API is purpose-built for memory (.search(), .get_all()), reducing the boilerplate code required to manage history.
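
The conflict-resolution idea behind this kind of ingestion can be sketched without any LLM. The toy `FactMemory` class below is hypothetical and is not Mem0's API: it assumes facts have already been extracted into (attribute, value) pairs, and it simply keeps the newest value per user and attribute while logging what it replaced.

```python
from collections import defaultdict


class FactMemory:
    """Keeps one current value per (user, attribute); newer facts replace older ones."""

    def __init__(self):
        self._facts = defaultdict(dict)  # user_id -> {attribute: value}
        self.history = []                # audit log of replaced facts

    def add(self, user_id, attribute, value):
        previous = self._facts[user_id].get(attribute)
        if previous is not None and previous != value:
            # A conflicting older fact exists: record it, then overwrite.
            self.history.append((user_id, attribute, previous))
        self._facts[user_id][attribute] = value

    def get_all(self, user_id):
        return dict(self._facts.get(user_id, {}))


memory = FactMemory()
memory.add("u1", "car_color", "blue")
memory.add("u1", "car_color", "red")        # supersedes "blue"
memory.add("u1", "seat_preference", "aisle")
profile = memory.get_all("u1")
```

In a real memory layer, the extraction step (turning "I bought a red car" into a structured fact) is where the LLM call, and therefore the added latency and cost, comes in.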

Where It Falls Short

- Latency & Cost: Because it often uses an LLM to extract and format memories during ingestion, it can be slower and more expensive than the raw indexing of tools like Shaped or Pinecone.

- Narrower Scope: Excellent for user profiles/facts, but less suited for high-volume retrieval of millions of documents or logs.

Pricing Open Source (Free); Managed Platform with usage-based pricing.

5. MemGPT

Quick Overview MemGPT (the project now developed under the name Letta) isn't a database; it is best described as an operating system for LLMs. It creates an abstraction layer that manages a "virtual context window," swapping information between "RAM" (context window) and "Hard Drive" (external vector storage) automatically.

Best For Developers building "infinite context" chat agents who want a framework to handle the logic of what to remember and when.

What Makes It Special

- Self-Editing Memory: MemGPT lets the agent explicitly manage its own memory through function calls. The agent can decide to write a core fact to long-term storage, or let older context be evicted from the prompt to free up space.

- Event-Based Architecture: It treats user interactions as events, allowing for interruptibility and state management that mimics an OS process.

- Differentiates Memory Types: It handles "Working Context," "Recall Storage," and "Archival Storage" as distinct tiers, mirroring the memory hierarchy of an operating system.
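
The "RAM versus hard drive" analogy can be sketched as a token-budgeted buffer that pages old messages out to an archive. This is a deliberately simplified illustration, not MemGPT's code: the `PagedContext` class is hypothetical, eviction is plain first-in-first-out rather than agent-driven, tokens are approximated by whitespace-separated words, and recall is a keyword match instead of a vector search.

```python
class PagedContext:
    def __init__(self, max_tokens):
        self.max_tokens = max_tokens
        self.working = []  # messages currently "in RAM" (the prompt)
        self.archive = []  # evicted messages "on disk"

    @staticmethod
    def count_tokens(text):
        # Crude assumption: one token per whitespace-separated word.
        return len(text.split())

    def used_tokens(self):
        return sum(self.count_tokens(m) for m in self.working)

    def append(self, message):
        self.working.append(message)
        # Page out the oldest messages until the budget fits,
        # always keeping the newest message in the working set.
        while self.used_tokens() > self.max_tokens and len(self.working) > 1:
            self.archive.append(self.working.pop(0))

    def recall(self, keyword):
        """Search archived messages for a keyword (case-insensitive)."""
        return [m for m in self.archive if keyword.lower() in m.lower()]


ctx = PagedContext(max_tokens=8)
ctx.append("my order id is 4417")
ctx.append("thanks for the help")
ctx.append("what was my order id")
recalled = ctx.recall("order id")
```

By the third message the original order ID has been paged out of the working set, yet it can still be recalled from the archive and re-inserted into the prompt.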

Where It Falls Short

- It is an orchestration framework, not a backend. You still need a database (like Postgres/pgvector or Chroma) underneath it to actually store the data.

- It introduces latency as the agent "thinks" about memory management steps.

Pricing Open Source (Free); Managed platform pricing varies.

6. LangChain / LangGraph

Quick Overview LangChain is the ubiquitous middleware for building AI applications. Its memory modules and LangGraph extension provide the orchestration logic to connect agents to vector stores, handling the flow of data "reading" and "writing."

Best For Teams already built on the Python/JS AI stack who need a standard interface to connect their agents to various storage backends.

What Makes It Special

- Universal Compatibility: LangChain ships integrations for most popular databases and vector stores, which reduces lock-in to any single storage vendor.

- Memory Primitives: Provides ready-made classes such as "ConversationBufferWindowMemory," "ConversationSummaryMemory," and "ConversationEntityMemory," allowing you to drop in complex memory logic with a few lines of code.

- LangGraph State Management: Enables the creation of cyclic, stateful agents where memory isn't just retrieval, but a persistent state passed between graph nodes.
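
The idea of memory as state passed between graph nodes can be illustrated in plain Python. The sketch below does not use the LangGraph API; `AgentState`, the two node functions, and `run_turn` are hypothetical names, and the "graph" is just a fixed sequence of functions that each receive the state and return an updated copy.

```python
from typing import Callable, TypedDict


class AgentState(TypedDict):
    messages: list[str]
    preferences: dict[str, str]


Node = Callable[[AgentState], AgentState]


def extract_preferences(state: AgentState) -> AgentState:
    """Node 1: update remembered preferences from the latest message."""
    prefs = dict(state["preferences"])
    if "python" in state["messages"][-1].lower():
        prefs["language"] = "python"
    return {"messages": state["messages"], "preferences": prefs}


def respond(state: AgentState) -> AgentState:
    """Node 2: produce a reply that depends on the remembered preferences."""
    language = state["preferences"].get("language", "unspecified")
    reply = f"assistant: replying with {language} examples"
    return {"messages": state["messages"] + [reply], "preferences": state["preferences"]}


def run_turn(state: AgentState, user_message: str, nodes: list[Node]) -> AgentState:
    state = {
        "messages": state["messages"] + [f"user: {user_message}"],
        "preferences": state["preferences"],
    }
    for node in nodes:
        state = node(state)
    return state


pipeline = [extract_preferences, respond]
state: AgentState = {"messages": [], "preferences": {}}
state = run_turn(state, "Please use Python snippets", pipeline)
state = run_turn(state, "How do I retry a request?", pipeline)
```

The second turn never mentions Python, but the reply still honors the preference because it lives in the state that flows between nodes rather than in the latest message.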

Where It Falls Short

- Like MemGPT, this is the logic layer. It relies entirely on the performance and capabilities of the underlying database you choose to connect it to.

- Can become bloated; the abstractions sometimes hide performance bottlenecks in the retrieval step.

Pricing Open Source (Free); LangSmith (observability) has usage-based pricing.

7. Qdrant

Quick Overview Qdrant is a high-performance vector search engine written in Rust. It is beloved by engineers for its speed, reliability, and powerful Payload Filtering capabilities, which allow for complex querying alongside vector search.

Best For Performance-critical applications requiring strict filtering—e.g., "Find the most relevant memory, but ONLY if it belongs to this tenant, was created this week, and is tagged 'urgent'."

What Makes It Special

- Payload Filtering: Qdrant excels at pre-filtering. You can attach arbitrary JSON payloads to vectors and filter on them efficiently without degrading search performance.

- Rust Performance: Extremely efficient resource usage and low latency, even under high load.

- Flexible Deployment: Runs as a cloud service, a Docker container, or even directly embedded in Python code for local testing.

- Recommendation API: Includes built-in primitives for recommendation (positive/negative examples) beyond simple nearest neighbor search.
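
Payload filtering boils down to restricting the candidate set by metadata before ranking by similarity. The sketch below shows that logic as a linear scan over in-memory points; it is not Qdrant's client API, and a real engine applies the filter inside its index rather than scanning every point. The `filtered_search` function and the sample payload fields are assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(query, points, must, top_k=3):
    """Return IDs of the most similar points whose payload matches every 'must' field."""
    candidates = [
        p for p in points
        if all(p["payload"].get(key) == value for key, value in must.items())
    ]
    candidates.sort(key=lambda p: cosine(query, p["vector"]), reverse=True)
    return [p["id"] for p in candidates[:top_k]]


points = [
    {"id": 1, "vector": [1.0, 0.0], "payload": {"tenant": "acme", "tag": "urgent"}},
    {"id": 2, "vector": [0.9, 0.1], "payload": {"tenant": "globex", "tag": "urgent"}},
    {"id": 3, "vector": [0.5, 0.5], "payload": {"tenant": "acme", "tag": "urgent"}},
    {"id": 4, "vector": [1.0, 0.0], "payload": {"tenant": "acme", "tag": "low"}},
]

hits = filtered_search([1.0, 0.0], points, must={"tenant": "acme", "tag": "urgent"})
```

Points 2 and 4 are near-perfect vector matches but are excluded outright, which is the guarantee you need for tenant isolation.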

Where It Falls Short

- Focuses heavily on the "Search" mechanics. It lacks the higher-level "Intelligence" features (like learned scoring models or automatic diversity reordering) found in full Retrieval Engines like Shaped.

Pricing Open Source (Free); Managed Cloud has a free tier, then starts ~$25/month.

8. Chroma

Quick Overview Chroma is the "easy button" for AI memory. It is an open-source, AI-native embedding database designed to be the simplest way to add state to an LLM application, focusing heavily on developer experience (DX).

Best For Local development, prototyping, and hackers who want to get an agent with memory running in 5 minutes.

What Makes It Special

- Incredible DX: You can spin it up with pip install chromadb. No Docker required for basic use; it runs in-process.

- Automatic Embedding: If you don't provide vectors, Chroma uses a default embedding model to vectorize your text automatically. This removes a huge step for beginners.

- Lightweight: Perfect for testing ideas on a laptop before moving to a heavy cloud infrastructure.

Where It Falls Short

- While great for prototyping, scaling Chroma to handle production-level traffic or distributed agent workloads has historically required more DevOps effort than managed platforms like Shaped or Pinecone.

Pricing Open Source (Free); Managed service in early access.

Summary Table

Why Shaped Is Sprinting Ahead

While vector databases solved the storage problem for AI, the challenge in 2026 has shifted to ranking.

An autonomous agent querying "What is the refund policy?" might find 50 contradictory documents in a standard vector database. A raw database returns the 5 documents whose embeddings are closest to the query, which can lead the agent to cite an outdated policy from 2023 simply because its wording closely matches the question.

Shaped wins because it adds judgment to memory. By using a 4-stage pipeline (Retrieve → Filter → Score → Reorder), Shaped can filter out the deprecated policy via metadata, score the remaining documents based on which one is most frequently accessed by support staff (popularity signal), and ensure the result applies to the user's specific region.
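
The refund-policy scenario can be walked through with a toy version of a Retrieve → Filter → Score → Reorder pipeline. This is a plain-Python sketch under stated assumptions, not ShapedQL or Shaped's implementation: the documents, the precomputed `similarity` values, the 0.3 popularity weight, and the one-result-per-topic reorder rule are all invented for illustration.

```python
DOCS = [
    {"id": "refund-2023", "similarity": 0.95, "deprecated": True, "region": "US", "views": 900, "topic": "refund"},
    {"id": "refund-2025-us", "similarity": 0.90, "deprecated": False, "region": "US", "views": 400, "topic": "refund"},
    {"id": "refund-2025-eu", "similarity": 0.91, "deprecated": False, "region": "EU", "views": 700, "topic": "refund"},
    {"id": "refund-draft-us", "similarity": 0.89, "deprecated": False, "region": "US", "views": 5, "topic": "refund"},
    {"id": "shipping-us", "similarity": 0.40, "deprecated": False, "region": "US", "views": 300, "topic": "shipping"},
]


def retrieve(docs, limit=10):
    """Stage 1: candidate generation by (precomputed) semantic similarity."""
    return sorted(docs, key=lambda d: d["similarity"], reverse=True)[:limit]


def filter_stage(docs, region):
    """Stage 2: hard constraints on metadata."""
    return [d for d in docs if not d["deprecated"] and d["region"] == region]


def score(docs, popularity_weight=0.3):
    """Stage 3: blend similarity with a popularity signal."""
    max_views = max((d["views"] for d in docs), default=0) or 1

    def value(d):
        return (1 - popularity_weight) * d["similarity"] + popularity_weight * d["views"] / max_views

    return sorted(docs, key=value, reverse=True)


def reorder(docs, per_topic=1):
    """Stage 4: keep at most per_topic results per topic to reduce redundancy."""
    seen, result = {}, []
    for d in docs:
        count = seen.get(d["topic"], 0)
        if count < per_topic:
            result.append(d)
            seen[d["topic"]] = count + 1
    return result


def recall(docs, region):
    return [d["id"] for d in reorder(score(filter_stage(retrieve(docs), region)))]


result = recall(DOCS, region="US")
```

Similarity alone would surface the deprecated 2023 policy first. After filtering, scoring, and reordering, the agent sees the current US policy, followed by one result from a different topic.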

For agents to be truly autonomous, they don't just need to remember facts; they need to understand which facts matter right now. That is the power of a Retrieval Engine over a database.

FAQs

What is the difference between Context and Memory? Context is the "working memory" of the LLM: the text currently inside the prompt window (e.g., the last 10 messages). Memory is "long-term storage": large amounts of data stored externally (in a database or retrieval engine) that the agent can search and retrieve when needed, extending its usable knowledge far beyond the token limit.

Do I really need a dedicated tool, or can I just use arrays in Python? For a toy script, arrays work. But semantic search (vectors) allows an agent to find information based on meaning, not just exact keyword matches. As soon as your data exceeds a few dozen documents, or if you need the agent to "remember" user preferences across sessions, a dedicated retrieval tool is essential for performance and accuracy.

Is Shaped a Vector Database? Shaped includes vector storage capabilities, but it is better defined as a Retrieval Engine. A vector database focuses on storing and searching vectors. Shaped focuses on the logic of ranking: ingesting data, filtering it, scoring it with machine learning models, and reordering it for the agent. It replaces the code you would otherwise have to write on top of a vector database.

How does Real-Time RAG work? In traditional RAG, data is often updated in periodic batches, for example nightly. If a user buys a product, the agent might not "know" about it until tomorrow. Real-Time RAG, enabled by tools like Shaped, uses streaming connectors (Kafka, Kinesis, Webhooks) to index that purchase event within seconds. This allows the agent to answer questions like "Where is my order?" shortly after the transaction occurs.
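
The difference between batch and streaming updates can be shown with two tiny in-memory indexes. Both classes are hypothetical stand-ins: a production system would consume events from a stream such as Kafka rather than receive direct method calls.

```python
class StreamingIndex:
    """Makes every event searchable as soon as it arrives."""

    def __init__(self):
        self._by_user = {}

    def on_event(self, event):
        self._by_user.setdefault(event["user_id"], []).append(event)

    def latest(self, user_id, event_type):
        """Return the most recent event of a given type for a user, if any."""
        for event in reversed(self._by_user.get(user_id, [])):
            if event["type"] == event_type:
                return event
        return None


class BatchIndex(StreamingIndex):
    """Buffers events and only makes them searchable when flush() runs."""

    def __init__(self):
        super().__init__()
        self._pending = []

    def on_event(self, event):
        self._pending.append(event)  # not searchable yet

    def flush(self):
        for event in self._pending:
            super().on_event(event)
        self._pending.clear()


order = {"user_id": "u1", "type": "purchase", "order_id": "A-1001"}

streaming, batch = StreamingIndex(), BatchIndex()
streaming.on_event(order)
batch.on_event(order)

seen_by_streaming = streaming.latest("u1", "purchase")
seen_by_batch_before_flush = batch.latest("u1", "purchase")
batch.flush()  # e.g. the nightly job
seen_by_batch_after_flush = batch.latest("u1", "purchase")
```

Until the batch job runs, an agent backed by the batch index has no record of the purchase, while the streaming index can answer immediately.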

What is the "Cold Start" problem in agents? This happens when a new user interacts with an agent for the first time. The agent has no history to personalize the experience. Advanced tools handle this via attribute-based retrieval. For example, Shaped can look at the user's profile (e.g., "CTO in New York") and retrieve memories or documents that similar users found useful, ensuring the agent is smart and helpful from the very first interaction.
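
One simple way to approximate attribute-based retrieval for a brand-new user is to borrow from users who share profile attributes. The sketch below is an assumption-laden illustration rather than how Shaped handles cold start: the `cold_start_recommendations` function, the profile fields, and the overlap-count weighting are all hypothetical.

```python
from collections import Counter


def cold_start_recommendations(new_user, known_users, top_k=2):
    """Rank documents by votes from users whose attributes match the new user.

    Each matching user contributes one vote per shared attribute to every
    document they found useful.
    """
    votes = Counter()
    for user in known_users:
        overlap = sum(
            1 for key, value in new_user.items()
            if user["attributes"].get(key) == value
        )
        if overlap == 0:
            continue
        for doc in user["useful_docs"]:
            votes[doc] += overlap
    ranked = sorted(votes, key=lambda d: (-votes[d], d))
    return ranked[:top_k]


new_user = {"role": "CTO", "city": "New York"}
known_users = [
    {"attributes": {"role": "CTO", "city": "New York"}, "useful_docs": ["sla-overview", "security-whitepaper"]},
    {"attributes": {"role": "CTO", "city": "Berlin"}, "useful_docs": ["security-whitepaper", "pricing-guide"]},
    {"attributes": {"role": "Intern", "city": "Austin"}, "useful_docs": ["getting-started"]},
]

suggestions = cold_start_recommendations(new_user, known_users)
```

The new user has no history at all, yet the agent can still lead with material that similar profiles found useful.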

How does Mem0 differ from Shaped? Mem0 is excellent for storing specific facts about a user (e.g., "User is allergic to peanuts"). It excels at building a personal profile. Shaped excels at retrieval over large datasets (e.g., "Find the best 5 recipes for a user allergic to peanuts"). Mem0 stores the fact; Shaped uses that fact to rank thousands of content items efficiently.