The Feedback Loop: The Data and Evaluation Engine

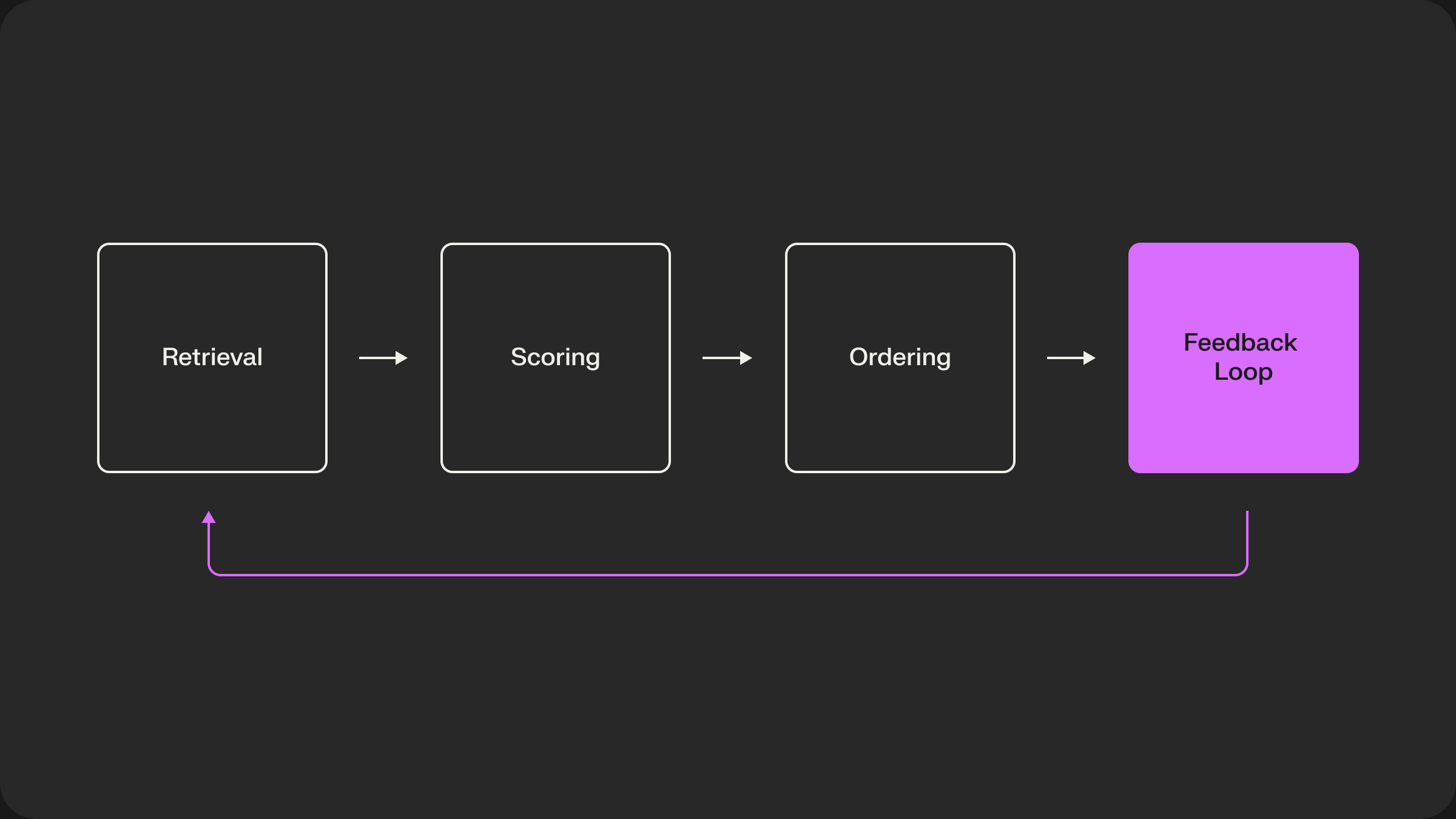

- In Part 1, we established the multi-stage architecture.

- In Part 2, we covered the Retrieval Stage, where we generated a high-recall candidate set.

- In Part 3, we dove into the Scoring Stage, where we assigned precise, pointwise scores to each candidate.

- In Part 4, we explored the Ordering Stage, where we applied listwise logic like diversity and exploration to construct a final page.

A recommender system that only serves results is a static, unintelligent system. The true power of a modern recommender lies in its ability to learn from its own outputs. It is a data product, a living system that is constantly being shaped by the interactions of its users.

In this final post, we will "zoom out" from the online request path and look at the infrastructure that powers this learning: the feedback loop and the evaluation engine. This is the circulatory and nervous system of the recommender, responsible for processing user feedback and measuring whether our changes are actually making the product better.

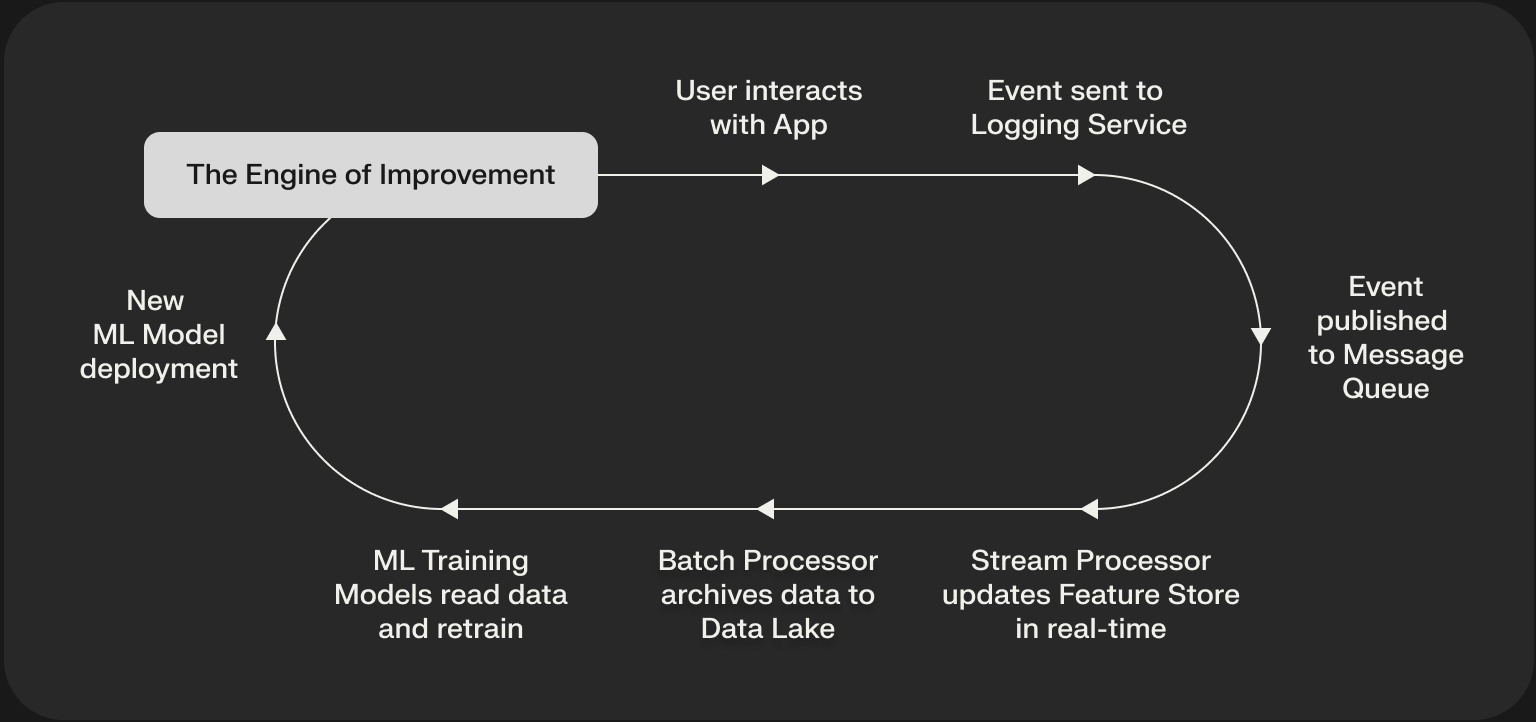

The Feedback Loop: The Engine of Improvement

The feedback loop is the data pipeline that turns user interactions into training signals for the next generation of our models. It is a continuous, cyclical process that connects the online serving system to the offline training world.

Let's break down the key components of this loop:

- Event Logging: Every meaningful user interaction (impressions, clicks, watches, purchases, likes, shares) is logged as a structured event. These logs are the raw material of learning. A typical log for a clicked item might contain (user_id, item_id, timestamp, request_id, model_version).

- Streaming & Batch Pipelines: These raw events are published to a real-time message queue like Apache Kafka. From there, the data flows down two parallel paths:

- Streaming Path: A stream processor like Flink or Spark Streaming consumes events in real-time to compute fresh, short-term features (e.g., "number of clicks by this user in the last 5 minutes"). These features are written to the low-latency Online Feature Store.

- Batch Path: Events are archived in bulk to a data lake or warehouse (e.g., S3, BigQuery). This creates a historical record of all interactions.

- Training Data Generation: On a periodic basis (e.g., daily), a batch job runs on the data lake to generate the training datasets for our models. This job joins the raw interaction logs with feature data to create the labeled examples our models need (e.g., "at this time, for this user with these features, we showed this item with these features, and they clicked").

- Model Retraining: The machine learning training pipeline consumes this new data to train updated versions of all our models—from the Two-Tower retrieval models to the pointwise scorers.

- Deployment: The newly trained models are validated and then deployed back to the production serving environment, completing the loop.
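The Training Data Generation step is where the loop most often goes subtly wrong, so a small example helps. The following is a minimal in-memory sketch, not a production pipeline (which would typically run as a Spark or SQL job over the data lake). It assumes a hypothetical `event_type` field on each log record and a per-user feature history stored as sorted `(timestamp, value)` pairs. Each impression becomes one example, labeled 1 if a click on the same item followed in the same request. Features are looked up as of the impression time, so the model never trains on information it would not have had at serving time. The names `Event`, `feature_as_of`, and `build_training_examples` are illustrative.

```python
import bisect
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    user_id: str
    item_id: str
    timestamp: float       # assumed unit: seconds since epoch
    request_id: str
    model_version: str
    event_type: str        # hypothetical field: "impression", "click", ...

def feature_as_of(snapshots, ts):
    """Return the latest value recorded at or before ts, or None.

    snapshots is a list of (timestamp, value) pairs sorted by timestamp.
    Looking only backwards in time keeps future information out of training.
    """
    times = [t for t, _ in snapshots]
    i = bisect.bisect_right(times, ts)
    return snapshots[i - 1][1] if i > 0 else None

def build_training_examples(events, user_features, label_event="click"):
    """Join impressions with later feedback and point-in-time features."""
    # Keys of (request, user, item) triples that received positive feedback.
    positives = {
        (e.request_id, e.user_id, e.item_id)
        for e in events
        if e.event_type == label_event
    }
    examples = []
    for e in events:
        if e.event_type != "impression":
            continue
        key = (e.request_id, e.user_id, e.item_id)
        examples.append({
            "user_id": e.user_id,
            "item_id": e.item_id,
            "model_version": e.model_version,  # which model chose to show it
            "user_feature": feature_as_of(user_features.get(e.user_id, []), e.timestamp),
            "label": int(key in positives),
        })
    return examples

logs = [
    Event("u1", "i1", 100.0, "r1", "v7", "impression"),
    Event("u1", "i2", 100.0, "r1", "v7", "impression"),
    Event("u1", "i1", 105.0, "r1", "v7", "click"),
]
feats = {"u1": [(50.0, 3), (102.0, 4)]}   # value changed after the impression
rows = build_training_examples(logs, feats)
# rows[0]: i1, label 1; rows[1]: i2, label 0; both use user_feature 3, not 4
```

Logging model_version alongside each impression is what later lets you attribute feedback to the model that produced the page.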

The cadence of this loop determines how quickly the system can adapt. Some systems retrain daily, while others have moved towards near-real-time learning, where models are updated intra-day.

Evaluation: Knowing if It's Working

A recommender system has a huge number of moving parts. How do we know if a new model, a change to the ordering logic, or a new feature is actually improving the user experience? We need a rigorous evaluation framework. Like the data pipeline, this framework has two parts: offline and online.

Offline Evaluation: The Sanity Check

Before deploying a new model, we need to have some confidence that it's better than the current one. Offline evaluation provides this sanity check by testing the model on a historical, held-out dataset.

Key offline metrics for ranking include:

- Precision@K: What fraction of the top K recommended items were actually relevant? Simple and interpretable.

- Recall@K: Of all the items a user actually engaged with in the test period, what fraction appeared in our top K recommendations? Crucial for the Retrieval stage, whose job is to produce a high-recall candidate set.

- Mean Average Precision (MAP): For each user, average the precision at every position where a relevant item appears, then average across users. It rewards putting relevant items higher up in the list.

- Normalized Discounted Cumulative Gain (NDCG): The workhorse of ranking evaluation. It's similar to MAP but more sophisticated, as it can handle graded relevance (e.g., a "purchase" is more relevant than a "click") and applies a logarithmic discount to the relevance of items further down the list. The result is divided by the score of the ideal ordering, so a perfect ranking scores 1.
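To make the definitions concrete, here is a minimal sketch of the four metrics for a single user's ranked list. It uses the common linear-gain form of NDCG, relevance divided by log2(position + 1); another widespread variant uses 2^relevance - 1 as the gain. Average precision here is normalized by the total number of relevant items, which is one of several conventions in use. The function names are illustrative, not from a specific library.

```python
import math

def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k recommended items that are relevant."""
    return sum(1 for item in ranked[:k] if item in relevant) / k

def recall_at_k(ranked, relevant, k):
    """Fraction of all relevant items that appear in the top k."""
    if not relevant:
        return 0.0
    return sum(1 for item in ranked[:k] if item in relevant) / len(relevant)

def average_precision(ranked, relevant):
    """Mean of precision@i over the positions i that hold a relevant item."""
    if not relevant:
        return 0.0
    hits, total = 0, 0.0
    for i, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / i
    return total / len(relevant)

def mean_average_precision(runs):
    """MAP: average of AP over (ranked, relevant) pairs, one per user."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)

def ndcg_at_k(ranked, gains, k):
    """NDCG with graded relevance; gains maps item -> relevance grade."""
    def dcg(items):
        # Position i is discounted by log2(i + 1): 1.0 at the top, shrinking below.
        return sum(gains.get(item, 0) / math.log2(i + 1)
                   for i, item in enumerate(items, start=1))
    ideal = sorted(gains, key=gains.get, reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(ranked[:k]) / ideal_dcg if ideal_dcg > 0 else 0.0

ranked = ["a", "b", "c", "d"]   # model's ordering for one user
relevant = {"a", "c"}           # items the user actually engaged with
gains = {"a": 1, "c": 2}        # e.g., click = 1, purchase = 2
print(precision_at_k(ranked, relevant, 2))   # 0.5
print(recall_at_k(ranked, relevant, 2))      # 0.5
print(average_precision(ranked, relevant))   # (1/1 + 2/3) / 2, about 0.833
print(ndcg_at_k(ranked, gains, 4))           # about 0.76
```

Note how NDCG penalizes this ranking for placing the click ("a") above the purchase ("c"), something the binary metrics cannot see.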

It's critical to create your offline test set by splitting your data by time. For example, you might train on data from Monday to Saturday and test on data from Sunday. This simulates the real-world scenario where you are predicting future interactions based on past data.
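A time-based split is simple to implement; the key is to cut at a timestamp rather than shuffle. A random split would let the model learn from Sunday's interactions while being tested on Saturday's, which inflates offline scores. This sketch assumes each record is a `(timestamp, payload)` pair; `temporal_split` is a hypothetical helper name. The dates match the Monday-to-Saturday / Sunday example (January 1, 2024 was a Monday).

```python
from datetime import datetime

def temporal_split(events, cutoff):
    """Split (timestamp, payload) pairs at a time cutoff.

    Records strictly before the cutoff go to training; records at or after
    it go to test, so the model is always evaluated on the "future".
    """
    train = [e for e in events if e[0] < cutoff]
    test = [e for e in events if e[0] >= cutoff]
    return train, test

events = [
    (datetime(2024, 1, 1, 9, 0), ("u1", "i1", "click")),   # Monday
    (datetime(2024, 1, 6, 22, 0), ("u2", "i3", "click")),  # Saturday
    (datetime(2024, 1, 7, 8, 0), ("u1", "i2", "click")),   # Sunday
]
train, test = temporal_split(events, cutoff=datetime(2024, 1, 7))
print(len(train), len(test))  # 2 1
```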

However, offline evaluation has a fundamental limitation: the offline/online gap. A model that performs better on a static, historical dataset will not always perform better with live users. This is because the offline data is biased by what the previous version of the system chose to show: users can only interact with items they were shown. If your new model is good at recommending items that the old system never surfaced, there are no logged interactions for those items, so standard offline metrics cannot credit the improvement and may even count those recommendations as misses.

Online Evaluation: The Ground Truth

Because of the offline/online gap, the only way to truly know if a change is an improvement is to test it on live traffic. A/B testing is the gold standard for online evaluation.

The process is simple in concept:

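- Split Users: Randomly assign users to groups, typically by hashing a stable user ID so that each user sees the same variant for the entire experiment.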

- Assign Treatments: The "control" group sees the existing, production version of the recommender. The "treatment" group sees the new version with your proposed change (e.g., the new scoring model).

- Measure and Compare: Collect data on the key business metrics for both groups over a period of time (e.g., one to two weeks) and check for a statistically significant difference.
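The first and last steps can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a full experimentation platform: `assign_variant` hashes a hypothetical experiment name together with the user ID, so assignment is random across users but stable for any one user, and `two_proportion_z_test` applies a pooled two-proportion z-test with a normal approximation. It treats each user as one yes/no outcome (e.g., "did this user convert?"), which matches the user-level unit of randomization; testing per-impression CTR this way would understate the variance, because impressions from the same user are correlated.

```python
import hashlib
import math

def assign_variant(user_id, experiment, treatment_share=0.5):
    """Deterministically bucket a user: same user + experiment -> same variant."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 2**32   # roughly uniform in [0, 1)
    return "treatment" if bucket < treatment_share else "control"

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference in rates between control (a) and treatment (b).

    Uses the pooled-variance normal approximation, which is reasonable when
    each group has many users. Returns (z, p_value).
    """
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))   # equals 2 * (1 - Phi(|z|))
    return z, p_value

print(assign_variant("user-42", "new_scorer_v2"))  # same answer on every call
# 10,000 users per arm: 500 converted in control, 560 in treatment.
z, p = two_proportion_z_test(500, 10_000, 560, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")  # roughly z = 1.89, p = 0.058
```

With these numbers, a lift from 5.0% to 5.6% falls just short of significance at the conventional 0.05 level, a reminder that small relative lifts need large samples or longer experiments.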

The choice of metrics here is crucial. We move beyond model-centric metrics like NDCG and look at the high-level business KPIs that the recommender is intended to drive:

- Engagement Metrics: Click-through rate, session length, number of interactions per user.

- Business Metrics: Conversion rates, gross merchandise value (GMV), subscription rates.

- Long-Term Health Metrics: User retention, diversity of consumed content, un-follow or "show less of this" rates.

A successful change is one that moves the target engagement or business metrics in a positive direction by a statistically significant margin, without degrading the long-term health metrics. Only after a successful A/B test is a new model or feature promoted to serve 100% of traffic, becoming the new "control" for the next experiment.

Series Conclusion: An Ever-Evolving System

We've now journeyed through the entire anatomy of a modern recommender system. We've seen that it's not a single model, but a complex, multi-stage architecture of cascading approximations. It's an ensemble of retrievers to cast a wide net, a set of precise scoring models to find the signal in the noise, and a final ordering stage to apply the nuanced logic of product design.

Most importantly, it's a living system, powered by a continuous feedback loop of user interactions and governed by a rigorous evaluation framework. Building one is a journey through the trade-offs between relevance, latency, and cost, and between the art of feature engineering and the science of model architecture.

This blueprint is not static. As new modeling techniques emerge and user expectations evolve, so too will the architecture. But the fundamental principles—of multi-stage ranking, of the online/offline split, and of data-driven iteration—will remain the bedrock of how we connect people with the content and products that matter to them.